How to Make AI-Generated Text Undetectable

Artificial intelligence (AI) has changed many aspects of our lives, including content creation. AI-generated text is increasingly prevalent, letting businesses and individuals streamline how they produce content. As AI advances, however, a new challenge arises: making AI-generated text indistinguishable from human-written content. This article covers techniques and strategies for making AI-generated text undetectable while giving readers a seamless, authentic experience.

Understanding the Importance of Authenticity:

In an age where trust and credibility play a vital role, maintaining authenticity in content is paramount. Readers value content that feels genuine, relatable, and human-authored. Therefore, it’s essential to ensure that AI-generated text aligns with these expectations. By employing specific tactics, we can bridge the gap between AI and human-generated content, ultimately making the text indistinguishable.

Enhancing Natural Language Processing (NLP) Capabilities:

Natural Language Processing (NLP) forms the foundation of AI-generated text. By leveraging advanced NLP models, we can enhance the quality and coherence of AI-generated content. Techniques such as fine-tuning pre-trained language models, optimizing hyperparameters, and using larger datasets can significantly improve the naturalness and fluency of the generated text. These measures help to eliminate common pitfalls like grammatical errors, awkward phrasing, and robotic language.

Incorporating Human Editing and Review:

While AI can generate impressive content, human input remains invaluable. Collaboration between AI and human editors can produce text that blends the strengths of both. Human editing can refine and polish AI-generated content, ensuring it aligns with the desired tone, style, and voice. Professional editors can add a touch of creativity, inject personality, and ensure the text resonates with the target audience, making it virtually indistinguishable from human-written content.

Contextualizing and Personalizing the Content:

One of the key challenges with AI-generated text is capturing the nuances of specific contexts and tailoring content to individual preferences. By employing contextualization techniques, such as incorporating relevant information and addressing specific user queries, AI-generated text can feel personalized and relevant. This personalized touch helps to create a seamless reading experience, making it difficult for readers to discern whether the text was generated by AI or written by a human.

Ensuring Ethical and Responsible Use of AI:

As we explore the potential of AI-generated text, it is crucial to emphasize ethical and responsible practices. Transparency regarding the use of AI-generated content should be maintained. Disclosures or disclaimers can help establish trust with readers, ensuring they are aware of the involvement of AI in content creation. By adhering to ethical guidelines and promoting transparency, we can build credibility and foster long-term relationships with our audience.

Understanding AI-Generated Text: Bridging the Gap between Human and Machine

- Understanding AI-Generated Text: Bridging the Gap between Human and Machine

- The Challenges of Detecting AI-Generated Text: Unveiling the Digital Disguise

- Techniques for Making AI-Generated Text Undetectable: Blurring the Lines of Authenticity

- Multi-Model Ensembles and Hybrid Approaches:

- Natural Language Processing (NLP) in AI-Generated Text: Unleashing the Power of Language Understanding

- Ethics and Implications of Undetectable AI-Generated Text: Navigating the Moral and Societal Challenges

- Tools and Software for Creating Undetectable AI-Generated Text: Exploring the Capabilities and Challenges

- Applications and Use Cases for Undetectable AI-Generated Text: Expanding Possibilities and Impact

- Content Generation and Automation:

- Virtual Assistants and Chatbots:

- Language Translation and Localization:

- Personalized Content Recommendations:

- Creative Writing and Storytelling:

- Natural Language Interfaces and Voice Assistants:

- Accessibility and Assistive Technologies:

- Language Learning and Education:

- The Future of AI-Generated Text and Detection Methods: Anticipating Challenges and Advancements

- Ethical Considerations and Regulations for AI-Generated Text: Balancing Innovation and Responsibility

- Conclusion

- FAQs

In the digital age, artificial intelligence (AI) has made remarkable strides in various fields, including content creation. AI-generated text has become a powerful tool that enables businesses and individuals to produce vast amounts of content efficiently. However, to harness the full potential of AI-generated text, it is crucial to understand its capabilities, limitations, and how it differs from human-written content.

The Power of Language Models:

At the core of AI-generated text lies the power of language models. These models are trained on vast amounts of text data, allowing them to learn the patterns, structures, and context of human language. By leveraging deep learning algorithms, language models can generate text that mimics human-like fluency and coherence. They excel at tasks like generating product descriptions, news articles, or even creative writing pieces.
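
To make the idea of "learning patterns from text" concrete, here is a deliberately tiny sketch: a bigram model that records which word follows which, then samples from those counts. It is a toy stand-in, not how modern deep learning language models work, and the corpus, function names, and `seed` parameter are all illustrative assumptions.

```python
import random
from collections import defaultdict


def train_bigram_model(corpus):
    """Map each word to the list of words observed right after it."""
    followers = defaultdict(list)
    words = corpus.split()
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return followers


def generate(followers, start_word, max_words=10, seed=0):
    """Sample a word sequence by repeatedly picking an observed follower."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    output = [start_word]
    # Stop at the length limit or when the last word has no known follower.
    while len(output) < max_words and followers.get(output[-1]):
        output.append(rng.choice(followers[output[-1]]))
    return " ".join(output)


# Hypothetical training text, chosen only for illustration.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_model(corpus)
print(generate(model, "the"))
```

Real language models replace the follower table with a neural network trained on far more data, but the principle of predicting the next token from observed patterns is the same.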

Limitations and Challenges:

While AI-generated text has come a long way, it still faces certain limitations and challenges. One of the main challenges is the lack of contextual understanding. AI models are excellent at generating text based on patterns in the training data, but they may struggle to grasp the underlying meaning or context of the content. This can result in occasional inaccuracies or nonsensical outputs. Additionally, AI models may lack the creativity, intuition, and emotional intelligence that humans bring to their writing.

The Importance of Training Data:

The quality and diversity of the training data play a vital role in the performance of AI-generated text. Training models on comprehensive and representative datasets can enhance their ability to generate coherent and contextually appropriate content. However, biases present in the training data can also be propagated into the generated text. It is essential to carefully curate and preprocess the training data to mitigate bias and ensure fairness in AI-generated content.
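
As a rough illustration of the preprocessing step mentioned above, the sketch below removes near-verbatim duplicates and very short fragments from a list of documents. It covers only basic cleaning; it does not measure or mitigate bias, which needs far more than this. The function names and the `min_words` threshold are assumptions made for the example.

```python
import re


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(documents, min_words=3):
    """Keep the first copy of each document and drop very short fragments."""
    seen = set()
    kept = []
    for doc in documents:
        key = normalize(doc)
        # Skip fragments below the (assumed) length threshold and repeats.
        if len(key.split()) < min_words or key in seen:
            continue
        seen.add(key)
        kept.append(doc.strip())
    return kept


# Hypothetical raw documents, for illustration only.
raw_documents = [
    "Hello  world again",
    "hello world again ",
    "Hi",
    "A different sentence here",
]
print(deduplicate(raw_documents))
```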

Fine-Tuning and Customization:

To bridge the gap between AI and human-written content, fine-tuning and customization techniques can be employed. Fine-tuning involves training a pre-existing language model on domain-specific data to make it more accurate and contextually relevant for specific industries or topics. Customization allows users to guide the AI model’s output by conditioning it on specific prompts, styles, or guidelines, resulting in text that aligns more closely with human expectations.

Evaluating and Iterating:

To ensure the quality and authenticity of AI-generated text, evaluation and iteration are crucial. Establishing evaluation metrics and human-in-the-loop feedback systems can help identify and correct any discrepancies or issues in the generated content. By continuously refining the model based on user feedback and real-world performance, the quality of AI-generated text can be improved, making it harder to distinguish from human-written content.

By understanding the capabilities, limitations, and nuances of AI-generated text, we can leverage its potential while maintaining authenticity. The power of AI lies in its ability to automate and streamline content creation, but it is essential to strike a balance between efficiency and the human touch. As AI technology continues to advance, it is an exciting time to explore the possibilities and push the boundaries of what AI-generated text can achieve.

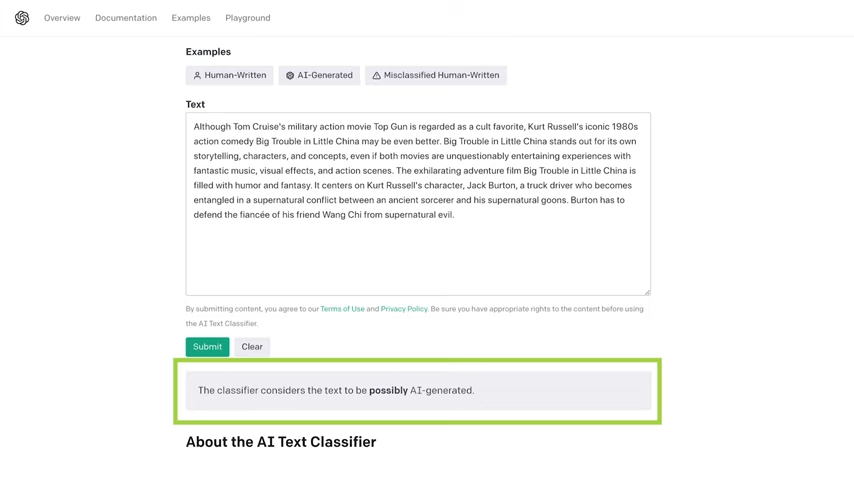

The Challenges of Detecting AI-Generated Text: Unveiling the Digital Disguise

As AI-generated text becomes more sophisticated, detecting its origin becomes increasingly difficult. Distinguishing between human-written and AI-generated content has become a pressing concern, especially in areas where authenticity and trust are crucial. Let’s explore the key challenges associated with detecting AI-generated text.

Evolving AI Models:

AI models continuously evolve and improve, making it harder to detect AI-generated text through traditional methods. As newer models are developed and trained on vast amounts of data, they become more adept at mimicking human language patterns, grammar, and context. This evolution presents a constant challenge for detection methods, as they need to keep up with the ever-changing capabilities of AI.

Naturalness and Coherence:

AI models have made significant strides in generating text that appears natural and coherent. They can produce content that flows smoothly, uses proper grammar, and stays consistent within a given context. This makes it challenging to identify AI-generated text based solely on linguistic cues. The ability of AI models to mimic human writing styles and nuances makes detection even more complex.

Data Diversity and Bias:

AI models heavily rely on the data they are trained on, and biases present in the training data can influence the generated content. Biased training data can lead to biased or skewed outputs, making it challenging to differentiate between human and AI-generated text solely based on content analysis. Detecting bias in AI-generated text requires a comprehensive understanding of the underlying biases in the training data and the ability to analyze the context in which the text is generated.

Human-AI Collaboration:

The collaborative nature of human-AI content creation further complicates the detection of AI-generated text. Human editors often refine and polish AI-generated content, making it more challenging to distinguish between the contributions of AI and humans. While the goal is to create seamless and authentic content, it poses a challenge in detecting the specific sections or instances where AI was involved.

Adversarial Techniques:

The cat-and-mouse game between AI-generated text and detection methods has led to the development of adversarial techniques. Adversarial attacks aim to deceive detection algorithms by manipulating the text in subtle ways that are imperceptible to human readers but can confuse automated detection systems. Adversarial techniques make it increasingly difficult to rely solely on automated detection methods, necessitating a multi-faceted approach to identifying AI-generated text.
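
One piece of such a multi-faceted approach can be sketched from the detection side: normalizing text before it reaches a detector. The example assumes, purely for illustration, that the manipulation uses invisible Unicode characters or compatibility variants of ordinary letters; the article does not name a specific attack, and the function name is hypothetical.

```python
import unicodedata

# Zero-width and invisible characters that do not change how text looks.
ZERO_WIDTH = {"\u200b", "\u200c", "\u200d", "\u2060", "\ufeff"}


def normalize_for_detection(text):
    """Strip invisible characters, then fold compatibility forms with NFKC."""
    visible = "".join(ch for ch in text if ch not in ZERO_WIDTH)
    # NFKC maps fullwidth letters and ligatures to their plain equivalents.
    # It does not map look-alike letters from other scripts (for example
    # Cyrillic), so this is only a partial defense.
    return unicodedata.normalize("NFKC", visible)


print(normalize_for_detection("de\u200btect"))
```

Normalization of this kind narrows the gap between what a human reader sees and what an automated system processes, but it is one safeguard among several rather than a complete answer.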

Transparency and Disclosure:

In an era where transparency and disclosure are paramount, the challenge lies in ensuring that AI-generated text is appropriately labeled and disclosed. Users have the right to know when they are consuming content generated by AI systems. However, without proper disclosure, distinguishing between human-written and AI-generated content becomes even more challenging for readers.

Addressing the challenges of detecting AI-generated text requires a multi-pronged approach involving advancements in detection techniques, continuous monitoring, and improved transparency in content creation processes. By staying vigilant and adapting to the evolving landscape of AI, we can work towards building trust, maintaining authenticity, and ensuring responsible use of AI-generated text in various domains.

Techniques for Making AI-Generated Text Undetectable: Blurring the Lines of Authenticity

As AI-generated text becomes more prevalent, the need for making it indistinguishable from human-written content has grown. To achieve this seamless integration, several techniques can be employed to enhance the quality, coherence, and authenticity of AI-generated text. Let’s explore some key strategies for making AI-generated text undetectable.

Contextual Understanding and Generation:

One of the primary challenges in making AI-generated text appear human-like is ensuring that it understands and generates content in the appropriate context. AI models should be trained on diverse and representative datasets that cover a wide range of topics, styles, and genres. This helps the models grasp the nuances and intricacies of different contexts, resulting in more contextually appropriate and authentic text.

Emulating Writing Styles and Voice:

To make AI-generated text indistinguishable, it is crucial to capture the unique writing styles and voices associated with different authors or content creators. AI models can be fine-tuned or trained specifically on the writing styles of human authors, allowing them to mimic their tone, language patterns, and even idiosyncrasies. This technique helps create a seamless transition between human and AI-generated content within the same document or platform.

Iterative Refinement and Human Feedback:

Human feedback plays a vital role in refining AI-generated text. By incorporating iterative feedback loops, human editors or subject matter experts can review and provide input on the AI-generated content. This collaborative process helps identify and correct any inconsistencies, errors, or deviations from desired quality standards. Continuous refinement based on human feedback improves the authenticity and overall quality of the generated text.

Incorporating Imperfections and Variability:

Human writing is not perfect, and AI-generated text should reflect that imperfection to appear more genuine. Introducing subtle variations, occasional grammatical errors, or minor deviations from perfect language can make the text feel more human-like. Striving for flawless text may actually raise suspicions, as humans naturally exhibit some degree of variability in their writing.

Ethical Use and Disclosure:

Maintaining ethical practices and ensuring transparency in the use of AI-generated text is paramount. Clearly disclosing when content is generated by AI helps establish trust with readers. Ethical guidelines should be followed to prevent the misuse of AI-generated text for deceptive or malicious purposes. By adhering to responsible practices and promoting transparency, we can build trust and credibility with our audience.

Multi-Model Ensembles and Hybrid Approaches:

Combining multiple AI models or employing hybrid approaches that integrate AI-generated and human-authored content can enhance the overall authenticity of the text. By leveraging the strengths of different models or blending human and AI contributions, we can create a seamless and harmonious content experience that is dynamic and engaging.

Natural Language Processing (NLP) in AI-Generated Text: Unleashing the Power of Language Understanding

Natural Language Processing (NLP) plays a crucial role in the development and enhancement of AI-generated text. NLP techniques enable AI models to understand, interpret, and generate human language, making it possible to create more coherent, contextually relevant, and authentic text. Let’s delve into the key aspects of NLP in AI-generated text.

Language Understanding:

NLP techniques form the foundation for language understanding in AI-generated text. Through processes like tokenization, part-of-speech tagging, and syntactic parsing, AI models can break down text into meaningful units, identify grammatical structures, and extract semantic information. This understanding of language enables AI models to generate text that adheres to grammar rules and maintains contextual coherence.
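
Tokenization, the first step named above, can be sketched with a regular expression. This is a minimal illustration using only the Python standard library; production systems typically use trained or subword tokenizers, and the patterns and function names here are assumptions.

```python
import re


def split_sentences(text):
    """Split after sentence-ending punctuation followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(text):
    """Return words (keeping simple contractions) and punctuation marks."""
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", text)


print(split_sentences("Hello there. How are you?"))
print(tokenize("AI models don't sleep."))
```

Part-of-speech tagging and syntactic parsing build on token lists like these, usually through a trained model rather than hand-written rules.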

Sentiment Analysis and Emotion Detection:

NLP techniques also facilitate sentiment analysis and emotion detection in AI-generated text. By analyzing the tone, sentiment, and emotional cues present in the text, AI models can generate content that aligns with specific emotional contexts. This capability allows for the creation of text that conveys the desired sentiment, whether it is informative, persuasive, or evokes a particular emotional response.
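
A very simple way to see how sentiment can be scored is a word-list approach, sketched below. The word lists and the scoring formula are made up for this example; real sentiment models are usually trained classifiers rather than fixed lexicons.

```python
import re

# Hypothetical mini-lexicons, for illustration only.
POSITIVE = {"good", "great", "helpful", "love", "excellent"}
NEGATIVE = {"bad", "poor", "confusing", "hate", "terrible"}


def sentiment_score(text):
    """Return a score in [-1, 1]: the share of positive minus negative hits."""
    words = re.findall(r"[a-z']+", text.lower())
    positive_hits = sum(word in POSITIVE for word in words)
    negative_hits = sum(word in NEGATIVE for word in words)
    total = positive_hits + negative_hits
    if total == 0:
        return 0.0  # no sentiment-bearing words found
    return (positive_hits - negative_hits) / total


print(sentiment_score("Great product, I love it"))
```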

Language Generation:

NLP techniques are instrumental in the language generation process for AI models. These techniques enable AI models to generate text that is fluent, coherent, and contextually appropriate. Language models, such as recurrent neural networks (RNNs) and transformer models like GPT, leverage NLP techniques to learn patterns, structures, and context from vast amounts of text data. This learning enables AI models to generate text that mimics human-like fluency and coherence.

Named Entity Recognition (NER):

NER is a crucial NLP task that identifies and classifies named entities in text, such as names of people, organizations, locations, and dates. AI models can leverage NER techniques to generate text that accurately incorporates relevant named entities. This enhances the contextual relevance and accuracy of AI-generated text, particularly in domains where precise information and entity recognition are vital.

Machine Translation and Language Adaptation:

NLP techniques are extensively used in machine translation, enabling AI models to translate text from one language to another. By leveraging techniques such as sequence-to-sequence models and attention mechanisms, AI models can generate translations that preserve the meaning and context of the original text. NLP techniques also facilitate language adaptation, allowing AI models to generate text in multiple languages or adapt their language style to specific domains or target audiences.
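
The attention mechanism mentioned above can be illustrated with a small scaled dot-product sketch in plain Python. It handles a single query vector and uses hand-picked toy numbers. Real translation models run this over learned, high-dimensional vectors with many attention heads, and the function names are assumptions.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query over a list of keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The context vector is the attention-weighted average of the values.
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context


# Toy example: the query matches the first key more closely than the second.
weights, context = attend([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[2.0], [4.0]])
print(weights, context)
```

The weights show how strongly each source position contributes to the output, which is how a translation model can focus on the relevant source words for each target word.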

Contextual Word Embeddings:

Word embeddings, such as Word2Vec and GloVe, are NLP techniques that represent words as dense vectors in a high-dimensional space. These embeddings capture semantic relationships between words, enabling AI models to understand the meaning and context of words in text. Contextual word embeddings, like those produced by BERT or GPT-based models, take into account the surrounding context of each word, further enhancing the understanding and generation of AI-generated text.
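
The "semantic relationships" that embeddings capture are usually measured with cosine similarity between vectors. The sketch below uses three hand-made 3-dimensional vectors as stand-ins; they are not taken from Word2Vec, GloVe, or any real model, whose vectors are learned and typically have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy vectors, for illustration only.
toy_vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.82, 0.15],
    "banana": [0.1, 0.05, 0.9],
}

related = cosine_similarity(toy_vectors["king"], toy_vectors["queen"])
unrelated = cosine_similarity(toy_vectors["king"], toy_vectors["banana"])
print(related > unrelated)
```

With contextual embeddings, the same word would get a different vector in each sentence, so the comparison would be made between occurrences rather than dictionary entries.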

NLP plays a pivotal role in AI-generated text, enabling models to understand, generate, and adapt human language. Through language understanding, sentiment analysis, language generation, named entity recognition, machine translation, and contextual word embeddings, NLP techniques empower AI models to create text that is coherent, contextually relevant, and aligned with the desired language style and tone. As NLP continues to advance, AI-generated text will become increasingly indistinguishable from human-written content, revolutionizing content creation in numerous domains.

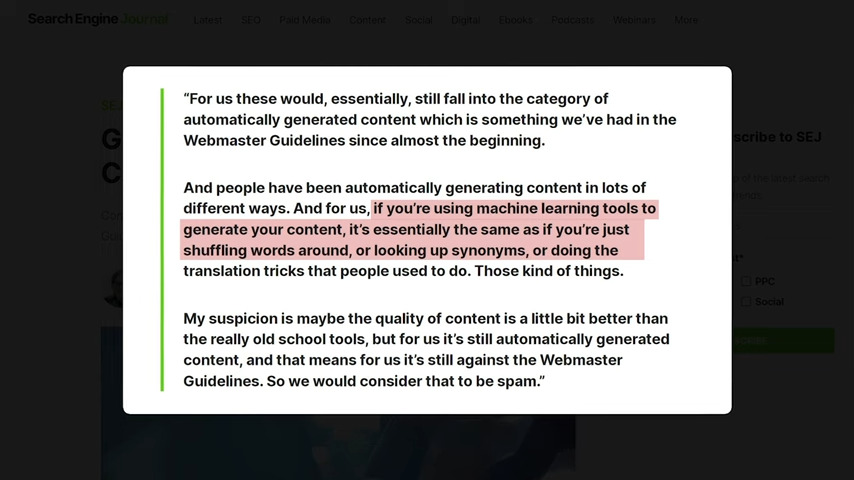

Ethics and Implications of Undetectable AI-Generated Text: Navigating the Moral and Societal Challenges

The development and use of undetectable AI-generated text raise significant ethical concerns and have far-reaching implications in various domains. It is crucial to examine the potential consequences and address the ethical considerations associated with the creation and dissemination of such content. Let’s explore the key ethical implications of undetectable AI-generated text.

Misinformation and Manipulation:

Undetectable AI-generated text can be used to spread misinformation, disinformation, and propaganda at an unprecedented scale. Malicious actors could exploit this technology to manipulate public opinion, deceive individuals, or spread false narratives. The ability to generate highly convincing and contextually appropriate text poses a significant risk to the integrity of information ecosystems and democratic processes.

Trust and Authenticity:

Undetectable AI-generated text challenges our ability to trust the authenticity of information. If AI-generated content is indistinguishable from human-written text, it becomes increasingly difficult for individuals to determine what is real and what is artificially generated. This erosion of trust can have severe societal consequences, affecting domains such as journalism, academia, and legal proceedings that rely on the authenticity and credibility of written content.

Intellectual Property and Plagiarism:

Undetectable AI-generated text raises concerns around intellectual property and plagiarism. If AI models can flawlessly mimic writing styles, there is a risk of unauthorized replication of copyrighted content. This can undermine the rights and livelihoods of content creators and authors who rely on their original work for recognition and compensation.

Consent and Privacy:

The generation of undetectable AI-generated text can raise issues of consent and privacy. AI models may use personal data, online profiles, or publicly available information to generate contextually appropriate content. Without proper consent and awareness, individuals may find their personal information being used to create content that they did not authorize or are unaware of, potentially violating their privacy rights.

Legal and Regulatory Challenges:

The emergence of undetectable AI-generated text poses legal and regulatory challenges. Current laws and regulations may not be equipped to address the complexities and potential harms associated with AI-generated content. Establishing guidelines and frameworks that govern the responsible use, disclosure, and labeling of AI-generated text becomes essential to protect individuals and maintain the integrity of information.

Digital Forensics and Verification:

As AI-generated text becomes increasingly sophisticated, traditional methods of digital forensics and verification may struggle to identify manipulated or AI-generated content. This poses challenges for fact-checking organizations, journalists, and researchers in their efforts to verify the authenticity and accuracy of information. Developing advanced tools and techniques that can detect AI-generated text becomes crucial to maintain the integrity of information sources.

Addressing the ethical implications of undetectable AI-generated text requires a multi-stakeholder approach involving researchers, policymakers, industry leaders, and society at large. It is essential to establish ethical guidelines, promote transparency, and ensure responsible use of AI-generated content. Developing robust detection methods, educating the public about the capabilities and limitations of AI, and fostering critical thinking skills are crucial to navigate the ethical challenges posed by undetectable AI-generated text. By doing so, we can mitigate the potential risks and promote the responsible and beneficial use of AI in the realm of text generation.

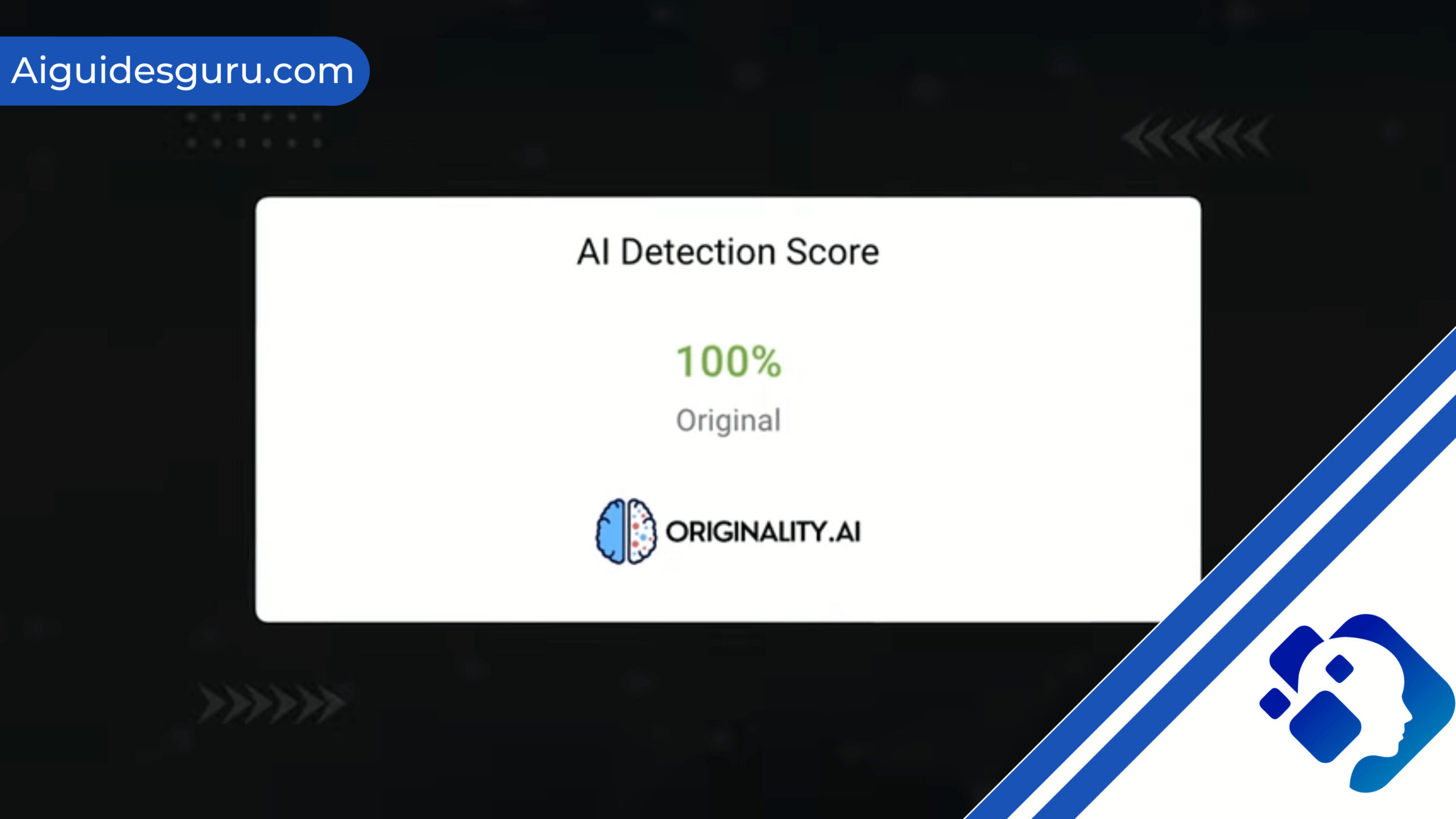

Tools and Software for Creating Undetectable AI-Generated Text: Exploring the Capabilities and Challenges

The development of undetectable AI-generated text has been facilitated by various tools and software that leverage advanced machine learning techniques. While these tools offer powerful capabilities in generating human-like text, it is important to recognize the ethical considerations and potential challenges associated with their use. Let’s explore some of the key tools and software used for creating undetectable AI-generated text.

Large Language Models:

Large language models, such as OpenAI’s GPT (Generative Pre-trained Transformer) series, have been at the forefront of AI-generated text. These models are trained on vast amounts of text data to learn patterns, context, and language structures. They can generate text that is coherent, contextually relevant, and often indistinguishable from human-written content.

Pre-trained Models and APIs:

Many organizations provide pre-trained language models and APIs that allow users to generate AI-generated text quickly. These models can be fine-tuned on specific domains or customized to mimic specific writing styles. Developers can integrate these models into their applications or platforms to generate text that aligns with their requirements.

Text Generation Libraries:

There are several text generation libraries and frameworks, such as Hugging Face’s Transformers library, that provide a range of pre-trained models and tools for generating AI-generated text. These libraries offer programmatic APIs and command-line interfaces (CLIs) that facilitate text generation tasks.

Reinforcement Learning:

Reinforcement learning techniques can be employed to train AI models to generate undetectable text. By rewarding models that produce more authentic and human-like text, reinforcement learning algorithms can guide the training process and improve the quality of generated content.

Domain-Specific Training:

Training AI models on domain-specific datasets can enhance the generation of contextually appropriate text. By fine-tuning models on specific topics, industries, or writing styles, the generated text can closely match the requirements of a particular domain or audience.

Collaborative Feedback Loops:

Incorporating human feedback and iterative refinement processes can improve the quality and authenticity of AI-generated text. Tools and software that facilitate collaborative feedback loops allow human reviewers or subject matter experts to review and provide input on the generated content, helping to identify and correct any inconsistencies or errors.

While these tools and software offer powerful capabilities, it is important to consider the ethical implications and potential challenges associated with undetectable AI-generated text. Responsible use, transparency, and clear disclosure are essential to maintain trust and integrity. Guidelines and regulations should be established to prevent misuse and ensure the ethical application of AI-generated text.

Moreover, as the development of undetectable AI-generated text progresses, it is crucial to simultaneously invest in tools and techniques that can detect and verify the authenticity of AI-generated content. This will help address the challenges of misinformation and deception that may arise from the use of such tools.

Applications and Use Cases for Undetectable AI-Generated Text: Expanding Possibilities and Impact

The emergence of undetectable AI-generated text has opened up a wide range of applications and use cases across various domains. The ability to generate text that closely resembles human-written content has the potential to revolutionize industries, enhance productivity, and enable new possibilities. Let’s explore some of the key applications and use cases for undetectable AI-generated text.

Content Generation and Automation:

Undetectable AI-generated text can be leveraged to automate content generation tasks. It can create blog posts, articles, product descriptions, and social media content at scale. This automation can significantly reduce the time and effort required for content creation, enabling organizations to generate a large volume of high-quality content efficiently.

Virtual Assistants and Chatbots:

AI-generated text enhances the conversational capabilities of virtual assistants and chatbots. These AI systems can generate responses that are contextually relevant, fluent, and indistinguishable from human-written text. They can provide personalized customer support, answer queries, and engage in natural language conversations, improving user experiences and interactions.

Language Translation and Localization:

Undetectable AI-generated text can greatly improve language translation and localization services. AI models can generate translations that are more accurate, contextually appropriate, and fluent. This enables organizations to provide high-quality translations for various purposes, such as localization of software, websites, and marketing materials.

Personalized Content Recommendations:

AI-generated text can be used to personalize content recommendations for users. By analyzing user preferences, behavior, and historical data, AI models can generate text-based recommendations for articles, books, movies, and other content. These recommendations can enhance user engagement, satisfaction, and overall user experiences.

Creative Writing and Storytelling:

AI-generated text can assist and inspire creative writing and storytelling. Writers can use AI models to generate prompts, suggest plotlines, or simulate characters’ dialogue. This collaboration between human writers and AI-generated text can spark creativity, boost productivity, and offer new avenues for storytelling.

Natural Language Interfaces and Voice Assistants:

AI-generated text can enhance natural language interfaces and voice assistants. It enables devices and applications to generate voice responses that are human-like and natural-sounding. This enhances the user experience in voice-controlled applications, virtual assistants, and smart home devices.

Accessibility and Assistive Technologies:

AI-generated text can contribute to accessibility and assistive technologies. Paired with text-to-speech systems, it can be delivered as spoken words, aiding individuals with visual impairments or reading difficulties. This technology improves accessibility to information and enhances inclusivity.

Language Learning and Education:

AI-generated text can support language learning and education. AI models can generate exercises, quizzes, and interactive content that helps learners practice and enhance their language skills. Additionally, AI-generated text can create language learning resources, such as grammar explanations and vocabulary lists, to assist learners in their educational journeys.

These are just a few examples of the applications and use cases for undetectable AI-generated text. As the technology continues to advance, its impact will likely extend to other domains, enabling new possibilities and transforming industries in innovative ways. It is important to navigate these applications responsibly, considering the ethical implications and ensuring that the generated content aligns with legal and ethical standards.

The Future of AI-Generated Text and Detection Methods: Anticipating Challenges and Advancements

The field of AI-generated text is rapidly evolving, and the future holds both exciting possibilities and challenges. As AI models become more sophisticated, generating text that is increasingly difficult to detect, it becomes essential to develop advanced detection methods to ensure transparency, trust, and ethical use. Let’s explore some key aspects related to the future of AI-generated text and detection methods.

Advancements in AI-Generated Text:

AI-generated text is expected to become even harder to distinguish from human-written content. As language models continue to improve, they will likely exhibit better coherence, context awareness, and understanding of nuances. The future might witness the development of AI models that can generate text with emotions, subjective perspectives, and even individual writing styles, making detection more challenging.

Evolving Detection Methods:

To counter the advancements in AI-generated text, detection methods will need to evolve and become more sophisticated. Researchers and technologists will focus on developing robust techniques to identify AI-generated content, even when it mimics human writing closely. This might involve leveraging advancements in natural language processing (NLP), machine learning, and deep learning to develop detection algorithms and tools.

Multi-modal and Contextual Analysis:

Future detection methods might incorporate multi-modal and contextual analysis to identify AI-generated text. By analyzing not only the textual content but also associated metadata, writing patterns, stylistic cues, and inconsistencies, detection systems can better differentiate between human and AI-generated text. Contextual analysis, such as assessing the coherence of content within broader contexts, can further aid in detection.
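
The "writing patterns" and "stylistic cues" mentioned above can be turned into numbers. The sketch below computes a few simple stylometric features: sentence count, mean sentence length, variation in sentence length, and vocabulary diversity. These features are illustrative assumptions, not a working detector. The article does not say which signals real systems use, and no threshold on these values reliably separates human from AI text.

```python
import re
import statistics


def stylometric_features(text):
    """Compute simple writing-pattern statistics for a piece of text."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(re.findall(r"\w+", sentence)) for sentence in sentences]
    words = re.findall(r"\w+", text.lower())
    return {
        "sentence_count": len(sentences),
        "mean_sentence_length": statistics.mean(lengths) if lengths else 0.0,
        # Population standard deviation: how much sentence length varies.
        "sentence_length_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        # Share of distinct words among all words used.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


features = stylometric_features("I ran. Then I walked all the way home.")
print(features)
```

A detection system would combine many such signals with metadata and contextual checks, since any single statistic is easy to misread in isolation.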

Collaboration and Knowledge Sharing:

Addressing the challenges of undetectable AI-generated text will require collaboration among researchers, industry experts, and policymakers. The sharing of knowledge, techniques, and datasets related to AI-generated text detection will be critical to stay ahead of evolving techniques used by malicious actors. Collaborative efforts can lead to the development of standardized detection frameworks and open-source tools that benefit the wider community.

Explainability and Interpretability:

As the complexity of AI-generated text increases, it becomes crucial to develop methods that can explain and interpret the decisions made by detection systems. Explainable AI (XAI) techniques will play a vital role in ensuring transparency and building trust. Users should be able to understand how a detection system identifies AI-generated text, enabling them to assess the reliability of the results.
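One simple way to make a detection result explainable is to use a model whose score breaks down into per-feature contributions. The sketch below assumes a hypothetical linear detector: the feature names, weights, and bias are invented for illustration and are not taken from any real tool. It returns both the overall probability and how much each feature pushed the score up or down.

```python
import math

# Hypothetical weights for illustration only; a real detector would learn
# these from labeled examples of human and AI-generated text.
WEIGHTS = {
    "sentence_length_stdev": -0.8,  # more variation lowers the score
    "type_token_ratio": -2.0,       # more diverse vocabulary lowers the score
}
BIAS = 3.0

def explain_score(features):
    """Return (probability_ai, contributions) for a dict of feature values."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability_ai = 1.0 / (1.0 + math.exp(-logit))  # logistic function
    return probability_ai, contributions

if __name__ == "__main__":
    prob, parts = explain_score(
        {"sentence_length_stdev": 2.0, "type_token_ratio": 0.5}
    )
    print(f"Estimated probability of AI authorship: {prob:.2f}")
    for name, value in parts.items():
        print(f"  {name}: {value:+.2f}")
```

Showing the contributions alongside the score lets a user see which signals drove the result and judge whether they are plausible for the text in question.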

Cat-and-Mouse Dynamics:

The future of AI-generated text and detection methods will likely involve a cat-and-mouse game between content generators and detection systems. As detection methods advance, content generators might adapt and develop new techniques to evade detection. This dynamic nature will require continuous innovation and vigilance to stay ahead of emerging threats and maintain the effectiveness of detection methods.

Ethical and Regulatory Considerations:

As AI-generated text becomes more sophisticated and undetectable, it is essential to address the ethical and regulatory considerations associated with its use. Policymakers will need to establish guidelines and regulations to ensure responsible deployment, disclosure, and labeling of AI-generated content. Ethical frameworks should be in place to govern its use and prevent malicious activities that exploit the technology.

The future of AI-generated text and detection methods will be shaped by ongoing research, technological advancements, and societal needs. By fostering collaboration, investing in research, and promoting responsible practices, we can navigate the challenges and harness the benefits of AI-generated text while upholding ethical standards and preserving trust in the information ecosystem.

Ethical Considerations and Regulations for AI-Generated Text: Balancing Innovation and Responsibility

The rise of AI-generated text brings about important ethical considerations and the need for appropriate regulations to ensure responsible use and mitigate potential harms. As AI models become more sophisticated and capable of generating indistinguishable text, it becomes imperative to address the following ethical considerations and establish regulatory frameworks:

Misinformation and Manipulation:

Undetectable AI-generated text has the potential to spread misinformation, manipulate public opinion, and deceive individuals. It is crucial to develop safeguards against the malicious use of AI-generated content to protect the integrity of information and prevent harm to individuals and society. Regulations should focus on promoting transparency, accountability, and clear disclosure of AI-generated text.

Consent and Privacy:

Training and running the models behind AI-generated text often involves analyzing and processing large amounts of data, including personal information. Respecting user privacy and obtaining informed consent for data usage is of utmost importance. Regulations should ensure that organizations collecting and using data to train AI models follow robust privacy practices and obtain explicit consent when generating text that may involve personal information.

Bias and Fairness:

AI models trained on biased or unrepresentative datasets can perpetuate existing biases in the generated text. It is essential to address bias and ensure fairness in AI-generated content, particularly in sensitive domains such as hiring, lending, and legal systems. Regulations should encourage organizations to evaluate and mitigate bias in AI models, promote diversity in training data, and regularly assess the impact of AI-generated content on different demographic groups.

Intellectual Property and Plagiarism:

AI-generated text raises questions regarding intellectual property rights and plagiarism. Regulations should provide clarity on ownership and attribution of AI-generated content. It is essential to strike a balance between encouraging innovation and protecting the rights of original creators. Organizations should have clear policies on the use and attribution of AI-generated text to respect intellectual property rights.

Deepfakes and Impersonation:

AI-generated text can be used to create the written counterpart of deepfakes: fake content that appears authentic but is actually manipulated or synthesized, such as messages written in the style of a real person. Regulations should address the risks associated with deepfakes, including the potential for identity theft, reputational harm, and privacy violations. Countermeasures, such as authentication mechanisms and watermarking, can be explored to detect and mitigate the impact of AI-generated deepfake text.
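To make the watermarking idea more concrete, the sketch below shows one statistical approach, assumed here purely for illustration: a secret key splits candidate words into "green" and "red" halves depending on the previous word, a cooperating generator favors green words, and a detector holding the same key counts how many green words appear. All names, the key, and the toy generator are hypothetical, and real systems work on model tokens rather than whole words.

```python
import hashlib
import math

def is_green(prev_token, token, key="demo-key"):
    """Keyed pseudo-random split: about half of all tokens are 'green'
    after any given previous token."""
    data = f"{key}|{prev_token}|{token}".encode("utf-8")
    return hashlib.sha256(data).digest()[0] % 2 == 0

def generate_watermarked(vocab, length, key="demo-key"):
    """Toy stand-in for a generator that prefers green tokens."""
    tokens = [vocab[0]]
    while len(tokens) < length:
        prev = tokens[-1]
        green = [t for t in vocab if is_green(prev, t, key)]
        pool = green or vocab  # fall back if no green token exists
        tokens.append(pool[len(tokens) % len(pool)])
    return tokens

def watermark_z_score(tokens, key="demo-key"):
    """How far the green count is from the 50% expected by chance,
    in standard deviations."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    n = len(pairs)
    green_count = sum(is_green(prev, tok, key) for prev, tok in pairs)
    return (green_count - 0.5 * n) / math.sqrt(0.25 * n)

if __name__ == "__main__":
    vocab = [f"w{i}" for i in range(50)]
    marked = generate_watermarked(vocab, 101)
    print("z-score with the correct key:", watermark_z_score(marked))
    print("z-score with a different key:", watermark_z_score(marked, "other-key"))
```

A score far above zero suggests the text carries the watermark, while text produced without the key should score near zero on average. Editing or paraphrasing the text weakens the signal, which is one reason watermarking is usually discussed as a partial countermeasure rather than a complete one.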

Transparency and Explainability:

Transparency and explainability are crucial for building trust in AI-generated text. Users should be aware when they are interacting with AI-generated content and have access to information about its origin. Regulations should encourage organizations to be transparent about the use of AI-generated text, provide explanations for automated decisions, and offer channels for users to seek clarifications or raise concerns.

Human Oversight and Accountability:

Human oversight is essential to ensure responsible use of AI-generated text. Regulations should emphasize the importance of human-in-the-loop approaches, where human reviewers or subject matter experts provide guidance, review, and validation of AI-generated content. Organizations should implement mechanisms to hold themselves accountable for the content generated by their AI models and establish processes for addressing concerns and feedback from users.

Continuous Monitoring and Auditing:

Regulations should encourage regular monitoring and auditing of AI-generated text systems to detect any potential biases, errors, or unintended consequences. Ongoing evaluation and assessment can help identify and rectify issues promptly and ensure that AI-generated content aligns with legal and ethical standards.
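As one possible shape for such an audit, the sketch below assumes a review log in which each AI-generated output is tagged with the group it concerns and whether a human reviewer flagged it as problematic. The record format and function names are assumptions for this example. It reports the flag rate per group and the largest gap between groups, which an auditor could track over time.

```python
from collections import defaultdict

def flag_rates_by_group(records):
    """records: iterable of (group, was_flagged) pairs from a review log.
    Returns the share of flagged outputs for each group."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}

def largest_gap(rates):
    """Difference between the highest and lowest flag rate."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())

if __name__ == "__main__":
    # Hypothetical review log for illustration only.
    log = [("a", True), ("a", False), ("b", False), ("b", False)]
    rates = flag_rates_by_group(log)
    print(rates, "largest gap:", largest_gap(rates))
```

A widening gap between groups would be a prompt for closer human review rather than proof of bias on its own, since small samples can produce large differences by chance.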

International Collaboration and Standards:

Given the global nature of AI-generated text, international collaboration and the development of common standards are crucial. Governments, organizations, and researchers should work together to establish ethical guidelines, share best practices, and harmonize regulations to ensure consistency and accountability across borders.

Public Awareness and Education:

Regulations should emphasize the importance of public awareness and education regarding AI-generated text. Efforts should be made to educate individuals about the capabilities and limitations of AI models, the potential risks associated with AI-generated content, and how to critically evaluate information in the digital age. Public awareness campaigns can empower individuals to make informed decisions and protect themselves from the potential harms of AI-generated text.

Ethical considerations and regulations for AI-generated text must strike a balance between fostering innovation and ensuring responsible use. As the technology evolves, it is crucial to continuously reassess and update regulations to adapt to emerging challenges and risks while maximizing the benefits of AI-generated text in an ethical and accountable manner.

Conclusion

While the concept of making AI-generated text undetectable may be intriguing, it is important to approach this topic with a strong emphasis on ethics and responsible use. The goal should not solely be to create text that is indistinguishable from human-written content but to utilize AI-generated text in ways that benefit society while maintaining trust and transparency. Here are some key considerations to keep in mind:

Prioritize Transparency: Instead of focusing solely on making AI-generated text undetectable, prioritize transparency by clearly disclosing when content is generated by AI. Users should have the right to know whether they are interacting with human-written or AI-generated content.

Responsible Use: Ensure that AI-generated text is used responsibly, adhering to ethical guidelines and legal frameworks. Organizations should have clear policies around the use of AI-generated text, respecting intellectual property rights, privacy, and avoiding misinformation or manipulation.

Detection and Accountability: Rather than solely striving for undetectability, work on developing robust detection methods to identify AI-generated text. Detection systems can help maintain accountability and prevent misuse, allowing for the identification of potential risks and addressing them promptly.

Collaboration and Research: Foster collaboration among researchers, industry experts, and policymakers to develop and share detection methods, techniques, and datasets. By working together, we can stay ahead of emerging challenges and ensure the continuous improvement of detection systems.

User Education: Promote public awareness and education about AI-generated text. Help users understand the capabilities and limitations of AI models, encourage critical thinking, and provide resources for evaluating the authenticity and reliability of information they encounter.

Ethical Frameworks and Regulation: Establish ethical frameworks and regulations that govern the use of AI-generated text. These frameworks should address issues such as bias, privacy, consent, and the responsible deployment of AI models.

Continuous Improvement: Embrace a mindset of continuous improvement in the development and deployment of AI-generated text systems. Regularly assess the impact of AI-generated text, refine detection methods, and adapt ethical guidelines and regulations to address emerging challenges and societal needs.

By focusing on these considerations, we can strive for the responsible use of AI-generated text, maintaining transparency, accountability, and trust. While the idea of undetectability may be alluring, it is crucial to prioritize the ethical implications and ensure that AI-generated text is used in a manner that benefits individuals and society as a whole.

FAQs

Q1: How can I make AI-generated text undetectable?

A1: Techniques such as fine-tuning language models and adding human editing can make AI-generated text harder to distinguish from human-written content, but aiming for undetectability is not advisable. Prioritizing transparency and responsible use of AI-generated text is crucial to maintain trust and ethical standards.

Q2: Are there any ethical concerns with making AI-generated text undetectable?

A2: Yes, there are significant ethical concerns associated with making AI-generated text undetectable. Undetectable AI-generated text can be used to spread misinformation, manipulate public opinion, and deceive individuals. Prioritizing transparency and accountability is vital to mitigate these risks and ensure responsible use of AI-generated text.

Q3: Can undetectable AI-generated text be used for malicious purposes?

A3: Yes, undetectable AI-generated text can be exploited for malicious purposes such as creating convincing phishing emails, spreading fake news, or impersonating individuals. This highlights the importance of developing robust detection methods and implementing regulations to prevent misuse and protect individuals and society from potential harm.

Q4: What are the alternatives to making AI-generated text undetectable?

A4: Instead of focusing on making AI-generated text undetectable, it is advisable to prioritize transparency and responsible use. Clearly disclosing when content is generated by AI, implementing detection systems to identify AI-generated text, and adhering to ethical guidelines and regulations can help ensure the accountable and ethical use of AI-generated text.