How to Use Code Llama

In the world of programming, the ability to generate code quickly and efficiently is highly valued. This is where Code Llama comes into play. Code Llama, developed by Meta AI, is a family of large language models designed to assist developers with a range of code-related tasks. Whether you need help with code completion, code generation, code testing, or code explanation, Code Llama has you covered.

In this comprehensive guide, we will explore the different ways to use Code Llama, its capabilities, and how it compares to other AI programming tools. We will also provide step-by-step instructions on setting up Code Llama and demonstrate its practical applications through examples.

Setting Up Code Llama

Before we dive into the various ways to use Code Llama, let’s start by setting it up. There are multiple ways to access Code Llama’s capabilities, both locally and through hosted services. One of the easiest ways to get started is by using the Hugging Face platform, which integrates Code Llama within the transformers framework.

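To begin, make sure you have a recent version of the transformers package installed, along with accelerate (which the device_map="auto" option in the script below relies on). The following command installs transformers from its main branch together with accelerate: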

```bash
pip install git+https://github.com/huggingface/transformers.git@main accelerate
```

Once the packages are installed, you can run the introductory script provided by Hugging Face. It loads codellama/CodeLlama-7b-hf, the base 7B model, which is suited to code completion. The script starts a Python function definition and asks the model to complete the code based on the function name. Here’s the script:

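```python
from transformers import AutoTokenizer
import transformers
import torch

# Load the tokenizer and a text-generation pipeline for the base 7B model.
tokenizer = AutoTokenizer.from_pretrained("codellama/CodeLlama-7b-hf")
pipeline = transformers.pipeline(
    "text-generation",
    model="codellama/CodeLlama-7b-hf",
    torch_dtype=torch.float16,  # half precision to reduce memory use
    device_map="auto",          # let accelerate place the model on available devices
)

# Ask the model to complete a function from its opening line.
sequences = pipeline(
    'def fibonacci(',
    do_sample=True,
    temperature=0.2,  # low temperature keeps the completion focused
    top_p=0.9,
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
    max_length=100,   # total length in tokens, prompt included
)

for seq in sequences:
    print(f"Result: {seq['generated_text']}")
```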

This script demonstrates how Code Llama can complete code based on a given prompt. In this case, we prompt the model to complete the Fibonacci function. You can modify the prompt and experiment with different code completions.
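Because generation stops only at an end-of-sequence token or at max_length, a completion may run past the end of the function (for example, into a call that prints the result). The helper below is a minimal sketch for trimming a completion to its first top-level function. The name truncate_completion is hypothetical, and its rule (the first non-blank, unindented line after the def line ends the function) is a simplifying assumption that ignores decorators and multi-line strings.

```python
def truncate_completion(generated_text: str) -> str:
    """Trim a completion to the first top-level Python function it contains.

    Simplifying assumption: the text starts with a `def` line, and the first
    non-blank line that starts at column 0 afterwards begins new top-level code.
    """
    lines = generated_text.splitlines()
    kept = lines[:1]  # the `def ...` header line
    for line in lines[1:]:
        # A non-blank, unindented line means the function body has ended.
        if line.strip() and not line[0].isspace():
            break
        kept.append(line)
    # Drop trailing blank lines left between the function and the cut point.
    return "\n".join(kept).rstrip()
```

In the script above, you could apply it as truncate_completion(seq['generated_text']) inside the loop.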

Accessing Code Llama through Hugging Face

Hugging Face provides a user-friendly platform for accessing Code Llama’s capabilities. You can find Code Llama models on the Hugging Face Hub, along with their model cards and licenses. The Hub also offers integration with Text Generation Inference, allowing for fast and efficient production-ready inference.
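Once a model is served with Text Generation Inference (TGI), clients talk to it over HTTP. The sketch below calls TGI’s /generate endpoint using only the Python standard library. The server URL (http://localhost:8080) is an assumption about your deployment, and the sampling values are simply carried over from the pipeline example; build_tgi_request and generate are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: a TGI server hosting a Code Llama model listens at this URL.
TGI_URL = "http://localhost:8080/generate"


def build_tgi_request(prompt: str, max_new_tokens: int = 100,
                      url: str = TGI_URL) -> urllib.request.Request:
    """Build a POST request for TGI's /generate endpoint."""
    payload = {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "do_sample": True,
            "temperature": 0.2,
            "top_p": 0.9,
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, **kwargs) -> str:
    """Send the request and return the generated text (requires a running server)."""
    with urllib.request.urlopen(build_tgi_request(prompt, **kwargs)) as resp:
        return json.loads(resp.read())["generated_text"]
```

Separating request construction from sending keeps the payload easy to inspect without a live server; generate("def fibonacci(") performs the actual call.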

If you want to experiment with Code Llama models without installing anything locally, you can utilize the Code Llama Playground available on the Hugging Face website. The playground allows you to generate both text and code using the Code Llama model.

Chatting with Code Llama

One of the exciting aspects of Code Llama is its chat version, which enables you to have interactive conversations with the model. This can be immensely helpful in scenarios where you need assistance with code-related tasks or explanations.

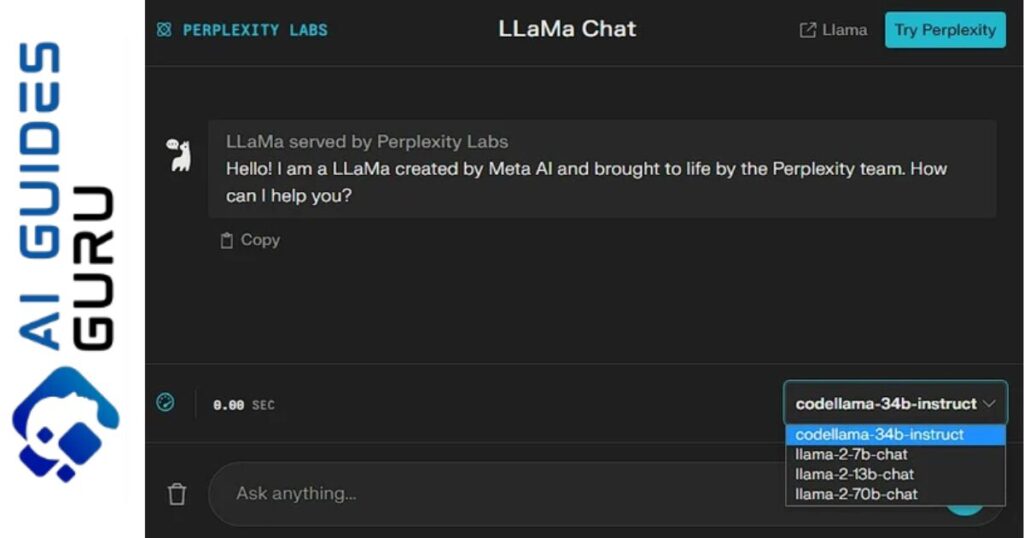

Perplexity AI and Faraday are two platforms that have integrated the Code Llama chat models. Perplexity AI offers Llama Chat, a conversational search engine powered by Code Llama’s 34b-instruct model. Simply navigate to the website to start a chat and ask Code Llama for code generation or clarification.

Faraday, on the other hand, is an easy-to-use desktop app that allows you to chat with AI “characters” offline. It supports the 7b, 13b, and 34b Code Llama instruct models and provides a seamless experience for code-related conversations.
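If you prompt an instruct model directly rather than through one of these apps, the input must follow its chat format. Code Llama - Instruct uses the Llama 2 chat style, with the user turn wrapped in [INST] ... [/INST] and an optional system prompt in <<SYS>> ... <</SYS>>. The sketch below builds a single-turn prompt under the assumption that the tokenizer adds the beginning-of-sequence token itself; build_instruct_prompt is a hypothetical name. Where available, the tokenizer’s own apply_chat_template method in transformers is the more reliable option.

```python
from typing import Optional

# Delimiters from the Llama 2 chat format, which Code Llama - Instruct follows.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_instruct_prompt(user_message: str,
                          system_prompt: Optional[str] = None) -> str:
    """Format a single-turn prompt for Code Llama - Instruct.

    The beginning-of-sequence token (<s>) is omitted on the assumption that
    the tokenizer adds it when the prompt is encoded.
    """
    content = user_message.strip()
    if system_prompt:
        # An optional system prompt is folded into the first user turn.
        content = B_SYS + system_prompt.strip() + E_SYS + content
    return f"{B_INST} {content} {E_INST}"
```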

Leveraging Code Llama Inside Your IDE

If you’re a developer and prefer to use Code Llama directly within your integrated development environment (IDE), there are several options available.

CodeGPT + Ollama

Ollama is a tool for running large language models locally, and its model library includes Code Llama, including the 7B instruct model. CodeGPT is an IDE extension that can use a local Ollama server as its model provider, so by installing Ollama, downloading a Code Llama model, and following CodeGPT’s setup instructions, you can use Code Llama as a copilot in your IDE.
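Tools such as CodeGPT talk to Ollama through its local REST API, which you can also call yourself. The sketch below sends a prompt to Ollama’s /api/generate endpoint with streaming disabled, so the reply arrives as one JSON object whose "response" field holds the text. It assumes Ollama is running on its default port (11434) and that the model was pulled beforehand (for example with ollama pull codellama:7b-instruct); the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_ollama_request(prompt: str, model: str = "codellama:7b-instruct",
                         url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(prompt: str, **kwargs) -> str:
    """Send the prompt and return the model's reply (requires Ollama running)."""
    with urllib.request.urlopen(build_ollama_request(prompt, **kwargs)) as resp:
        return json.loads(resp.read())["response"]
```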

Continue + Ollama / TogetherAI / Replicate

The Continue VS Code extension offers another way to use Code Llama within your IDE. You can run Code Llama as a drop-in replacement for GPT-4 by connecting Continue to Ollama (locally), Together AI, or Replicate. Continue’s documentation provides step-by-step instructions for installation and usage.

It’s worth mentioning that Hugging Face is also updating its VS Code extension to support Code Llama, so keep an eye out for updates.

Comparing Code Llama to Other AI Programming Tools

Code Llama is a powerful AI programming tool, but how does it compare to similar tools on the market? The table below compares Code Llama with GitHub Copilot and ChatGPT across several dimensions.

| Tool | Developer | Training Data | Supported Programming Languages | Model Sizes | Prompt Sizing | Context Window | Code Location |
|---|---|---|---|---|---|---|---|
| GitHub Copilot | GitHub | Natural language + 100GB of GitHub code | Most languages | Not publicly disclosed | 1024 | 8k | Cloud-based |
| ChatGPT | OpenAI | 100GB of code snippets | Most languages | Not publicly disclosed | 1024 | 100k | Cloud-based |
| Code Llama | Meta AI | 500 billion tokens of code data | Python, C++, Java, PHP, C#, TypeScript, Bash | 7B, 13B, 34B | 4096 | 16k (up to 100k) | Local |

As the comparison table shows, Code Llama supports many popular programming languages, including Python, Java, and C++. It also comes in three variants (a base model, a Python-specialized model, and an Instruct model tuned to follow natural-language instructions), each available in sizes from 7B to 34B parameters.
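On the Hugging Face Hub, these variants and sizes appear as separate repositories under the codellama organization, following the same pattern as the checkpoint in the setup script (codellama/CodeLlama-7b-hf). The helper below is a hypothetical convenience function that assumes this naming pattern and covers only the sizes listed in the table.

```python
# Sizes and variants as listed in this guide; the naming pattern is assumed
# to match the Hub repositories such as "codellama/CodeLlama-7b-hf".
SIZES = ("7b", "13b", "34b")
VARIANT_SUFFIXES = {"base": "", "python": "-Python", "instruct": "-Instruct"}


def codellama_model_id(size: str, variant: str = "base") -> str:
    """Return the Hugging Face model ID for a Code Llama size and variant."""
    size = size.lower()
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {SIZES}")
    try:
        suffix = VARIANT_SUFFIXES[variant.lower()]
    except KeyError:
        raise ValueError(f"unknown variant {variant!r}") from None
    return f"codellama/CodeLlama-{size}{suffix}-hf"
```

The returned ID can be passed to AutoTokenizer.from_pretrained or transformers.pipeline in place of the hard-coded name in the setup script.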

Practical Applications of Code Llama

Now that we have explored the different ways to use Code Llama and compared it to other AI programming tools, let’s dive into some practical applications of Code Llama. Here are a few examples:

Code Completion

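Code Llama can be a valuable tool for code completion and generation. You can give it a partial snippet or function to finish, or describe what you need in natural language. For example, if you have a Python class that would benefit from a binary sorting algorithm, you can ask Code Llama to write one. In the response below, the model produces a binary insertion sort: it uses binary search to find where each element belongs in the already-sorted part of the list, then shifts larger elements right to make room. Here’s the prompt and response: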

Prompt:

I have a Python class that would benefit from a binary sorting algorithm. Could you please create a Python class that takes in a list of values, sorts them using binary sort, and returns the sorted list?

Response:

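```python
class BinarySort:
    def __init__(self, arr):
        self.arr = arr  # note: the list is sorted in place

    def sort(self):
        # Binary insertion sort: insert each element into the sorted prefix arr[:i].
        for i in range(1, len(self.arr)):
            key = self.arr[i]
            # Binary search for the first position in arr[:i] whose value is >= key.
            left, right = 0, i - 1
            while left <= right:
                mid = (left + right) // 2
                if self.arr[mid] < key:
                    left = mid + 1
                else:
                    right = mid - 1
            # Shift arr[left:i] one slot right, then place key at its insertion point.
            self.arr[left + 1:i + 1] = self.arr[left:i]
            self.arr[left] = key
        return self.arr
```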
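Python’s standard library already provides the binary-search step used in the class above: bisect.insort finds the insertion point with a binary search and inserts the value there. The sketch below uses it to implement the same algorithm in a few lines (binary_insertion_sort is a hypothetical name). Note that binary search reduces the number of comparisons, but inserting still shifts elements, so the worst case remains O(n²); for everyday sorting, the built-in sorted() is the better choice.

```python
import bisect


def binary_insertion_sort(values):
    """Return a new sorted list built by binary insertion."""
    result = []
    for value in values:
        # insort locates the position by binary search (after any equal
        # elements, which keeps the sort stable) and inserts the value there.
        bisect.insort(result, value)
    return result
```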

Code Optimization

Code Llama can also assist with code optimization, helping you improve the efficiency and readability of your code. For example, if you have code that sorts a list with nested loops (an O(n²) exchange sort), Code Llama can suggest replacing it with Python’s built-in list.sort() method, which runs in O(n log n) time. Here’s an example:

Original Code:

```python
arr = [5, 2, 8, 7, 1]
temp = 0
for i in range(0, len(arr)):
    for j in range(i+1, len(arr)):
        if arr[i] > arr[j]:
            temp = arr[i]
            arr[i] = arr[j]
            arr[j] = temp
```

Optimized Code (suggested by Code Llama):

```python
arr = [5, 2, 8, 7, 1]
arr.sort()
```
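To check that such a suggestion is both correct and faster, you can compare the two approaches on the same data. The sketch below wraps the nested-loop code in a function (using tuple swapping instead of a temp variable), confirms it agrees with sorted(), and times both with timeit. The names exchange_sort and compare_timings are hypothetical, and actual timings depend on your machine, so none are shown here.

```python
import random
import timeit


def exchange_sort(arr):
    """The nested-loop sort from the original example: O(n^2) comparisons."""
    arr = list(arr)  # work on a copy so the caller's list is unchanged
    for i in range(len(arr)):
        for j in range(i + 1, len(arr)):
            if arr[i] > arr[j]:
                arr[i], arr[j] = arr[j], arr[i]
    return arr


def compare_timings(n=2_000, seed=0):
    """Return (exchange_sort seconds, sorted seconds) for n random integers."""
    rng = random.Random(seed)
    data = [rng.randint(0, 10_000) for _ in range(n)]
    # Sanity check: both approaches must produce the same result.
    if exchange_sort(data) != sorted(data):
        raise RuntimeError("exchange_sort disagrees with sorted()")
    slow = timeit.timeit(lambda: exchange_sort(data), number=1)
    fast = timeit.timeit(lambda: sorted(data), number=1)
    return slow, fast
```

Calling compare_timings() and printing the pair shows how the gap grows as n increases.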

Code Testing

Code Llama can generate test cases for your code, making it easier to test and validate your implementations. For example, if you have a function that calculates the factorial of a number, you can ask Code Llama to generate test cases to verify its correctness. Here’s an example:

Code:

```python
def factorial(n):
    if n == 0 or n == 1:
        return 1
    else:
        return n * factorial(n-1)
```

Generated Test Cases (suggested by Code Llama):

```python
assert factorial(0) == 1
assert factorial(1) == 1
assert factorial(5) == 120
assert factorial(10) == 3628800
```
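Generated assertions are easier to maintain as a small test module that a runner like pytest can discover (pytest collects functions whose names start with test_). The sketch below groups the cases above, cross-checks against math.factorial, and adds a negative-input case. The ValueError guard is an illustrative addition, not part of the original function: without it, factorial(-1) would keep recursing until Python raises RecursionError. The test function names are hypothetical.

```python
import math


def factorial(n):
    # Illustrative addition: reject negative input instead of recursing forever.
    if n < 0:
        raise ValueError("factorial is undefined for negative numbers")
    if n in (0, 1):
        return 1
    return n * factorial(n - 1)


def test_known_values():
    for n, expected in [(0, 1), (1, 1), (5, 120), (10, 3628800)]:
        assert factorial(n) == expected


def test_matches_math_factorial():
    # Cross-check against the standard library implementation.
    for n in range(20):
        assert factorial(n) == math.factorial(n)


def test_negative_input_raises():
    try:
        factorial(-1)
    except ValueError:
        return
    raise AssertionError("expected ValueError for negative input")
```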

These are just a few examples of how Code Llama can be used in various coding scenarios. Its versatility and ability to understand natural language prompts make it a valuable tool for both beginners and experienced developers.

Conclusion

In conclusion, Code Llama is a powerful AI programming tool that can greatly enhance your coding experience. Whether you need assistance with code completion, code generation, code optimization, or code testing, Code Llama has the capabilities to help you streamline your development process.

In this guide, we explored the different ways to use Code Llama, from setting it up locally to accessing it through the Hugging Face platform. We also discussed practical applications of Code Llama, such as code completion, code optimization, and code testing.

Code Llama’s broad language support, choice of model sizes, openly available weights that you can run locally, and ability to understand natural language prompts set it apart from many other AI programming tools. By incorporating Code Llama into your development workflow, you can save time, improve code quality, and enhance your overall productivity.

So, why not give Code Llama a try and experience its power for yourself? Happy coding!

Is Code Llama available for all programming languages?

Code Llama supports many programming languages, including Python, C++, Java, PHP, C#, TypeScript, and Bash. However, the level of support may vary depending on the specific language and model variant. It’s best to refer to the documentation or model cards for more information.

Can I use Code Llama offline?

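Yes. Because Code Llama’s weights can be downloaded and run locally, you can use it offline through a local backend such as Ollama (including from your IDE via CodeGPT or Continue) or a desktop application like Faraday. Once the model is downloaded, these options let you use Code Llama’s capabilities without an internet connection. Hosted services such as Perplexity AI, Together AI, or Replicate do require a connection.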

Is Code Llama suitable for beginners?

Yes, Code Llama is suitable for both beginners and experienced developers. Its ability to understand natural language prompts makes it accessible and user-friendly. Beginners can use Code Llama to generate code from natural language instructions, while experienced developers can leverage its advanced features to streamline their coding tasks.