How to Train GPT4All

Training large language models such as GPT (Generative Pre-trained Transformer) has revolutionized natural language processing tasks. While pre-training on massive amounts of data enables these models to learn general language patterns, fine-tuning with specific data can further enhance their performance on specialized tasks. In this comprehensive guide, we will explore the process of training GPT4All with customized local data, providing step-by-step instructions, and highlighting the benefits and considerations involved.

Understanding GPT4All

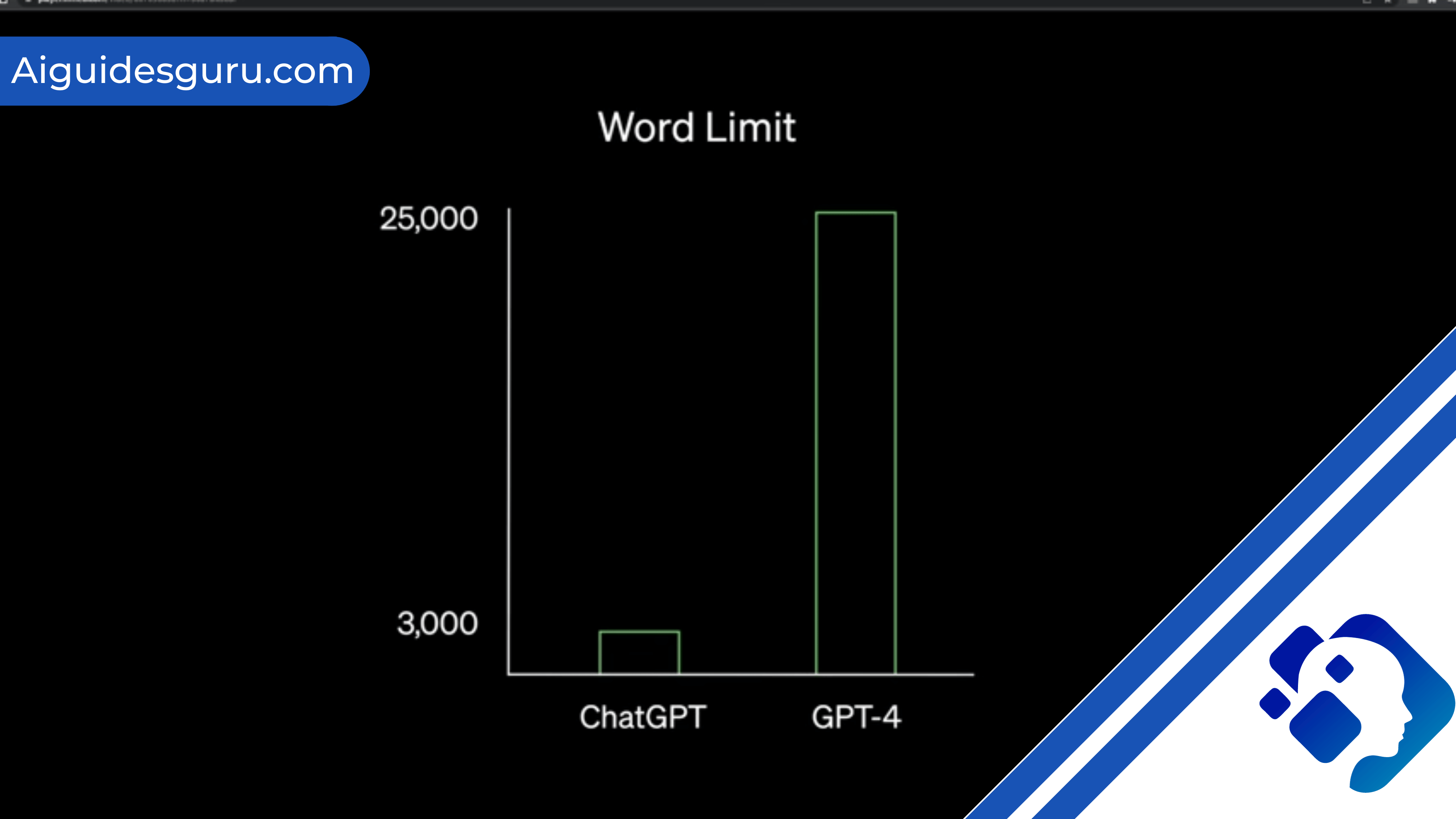

GPT4All is an open-source ecosystem developed by Nomic AI, an information cartography company. It lets you integrate large language models (LLMs) into applications without paying for API access or cloud hardware. The goal of GPT4All is to make AI resources accessible to smaller businesses, organizations, and independent researchers. Unlike ChatGPT, GPT4All models run locally on a modern computer, without an internet connection or a GPU.

Benefits of Training GPT4All

Training GPT4All with customized local data offers several advantages over using ChatGPT or other pre-trained models. Here are some key benefits:

- Portability: GPT4All models are quantized files, typically a few gigabytes in size, that run on a CPU without a GPU. They can be copied to a USB flash drive and used on any modern computer.

- Privacy and Security: Unlike ChatGPT, which stores conversations and requires an internet connection, GPT4All prioritizes data transparency and privacy. Your data remains on your local hardware unless you choose to share it with GPT4All for model improvement purposes.

- Offline Mode: GPT4All does not need a constant internet connection or API access. Because the model runs on your own machine, you can keep using GPT4All offline once the model file has been downloaded.

- Free and Open Source: The GPT4All software is open source and free to use, and many of the models it offers are released under open licenses, so you can fine-tune and integrate them without paying licensing fees. Licenses differ from model to model, so check each one before use.

Preparing Customized Local Data

Before diving into the training process, it is essential to prepare your customized local data. The quality and relevance of your data will greatly impact the performance of the trained GPT4All model. Here are some steps to consider:

- Data Collection: Identify and gather relevant data for your specific task or application. This can include domain-specific documents, text corpora, or any other data that aligns with your training objectives.

- Data Cleaning: Clean the collected data to remove noise, inconsistencies, and irrelevant information. Put the data into a standardized format and check it for biases that could affect the model’s performance.

- Data Formatting: Structure the data in a way that suits the input requirements of GPT4All. This may involve organizing the data into prompts and completions or any other format that aligns with the training methodology.

- Data Split: Divide the dataset into training, validation, and test sets. The training set will be used to train the model, the validation set to monitor its performance, and the test set to evaluate the final trained model’s effectiveness.
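The data-preparation steps above can be sketched in plain Python. This is a minimal sketch, assuming your raw data is already a list of (prompt, response) string pairs; the field names prompt and response, the 80/10/10 split, and the fixed random seed are illustrative choices, not a format GPT4All requires.

```python
import json
import random
import re


def clean_text(text: str) -> str:
    """Collapse runs of whitespace and strip leading/trailing spaces."""
    return re.sub(r"\s+", " ", text).strip()


def prepare_records(raw_pairs):
    """Turn (prompt, response) tuples into cleaned, de-duplicated records.

    Pairs whose prompt or response is empty after cleaning are dropped.
    The "prompt"/"response" keys are an assumed format, not a GPT4All schema.
    """
    seen = set()
    records = []
    for prompt, response in raw_pairs:
        prompt, response = clean_text(prompt), clean_text(response)
        if not prompt or not response:
            continue  # drop incomplete examples
        key = (prompt, response)
        if key in seen:
            continue  # drop exact duplicates
        seen.add(key)
        records.append({"prompt": prompt, "response": response})
    return records


def split_records(records, val_frac=0.1, test_frac=0.1, seed=42):
    """Shuffle deterministically and split into train/validation/test lists."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_test = int(n * test_frac)
    n_val = int(n * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def write_jsonl(path, records):
    """Write one JSON object per line (the JSON Lines format)."""
    with open(path, "w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec, ensure_ascii=False) + "\n")


if __name__ == "__main__":
    raw = [("What is GPT4All?", "An open-source ecosystem for running LLMs locally.")] * 3
    records = prepare_records(raw)  # the three duplicates collapse to one record
    train, val, test = split_records(records)
    print(len(records), len(train), len(val), len(test))
    # write_jsonl("train.jsonl", train) and so on would save each split to disk.
```

Shuffling with a fixed seed keeps the split reproducible, so validation and test results stay comparable across training runs.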

Fine-tuning GPT4All with Local Data

Now that your customized local data is prepared, you can proceed with the fine-tuning process. Fine-tuning GPT4All involves training the model on your specific dataset, allowing it to learn from the domain-specific knowledge contained within the data. Follow these steps to fine-tune GPT4All:

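- Install GPT4All: Download and install GPT4All using the one-click installer, which is available for Windows, macOS, and Linux. The installer sets up the desktop chat application, which is useful for running and testing models. Fine-tuning itself is done with separate Python training code, such as the training scripts published in the GPT4All repository together with libraries like Hugging Face Transformers.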

- Model Selection: Choose the GPT4All model that best suits your training objectives. Consider factors such as model size, response speed, and licensing restrictions. Refer to the provided table in the GPT4All documentation for an overview of available models and their characteristics.

- Configure Training Parameters: Create a YAML configuration file specifying the training parameters for GPT4All. This includes model and tokenizer names, batch size, learning rate, evaluation steps, and other relevant settings. Customize the configuration file to align with your specific training requirements.

- Load and Preprocess Data: Load your prepared dataset with the Hugging Face Datasets library, which provides a unified API for working with NLP datasets. Then preprocess the data (for example, tokenize it) into the input format your training script expects.

- Train the Model: Launch training from the terminal with Hugging Face Accelerate (accelerate launch), specifying the training script, the configuration file, and the desired number of processes and machines.

- Monitor and Evaluate: Monitor the training progress and evaluate the model’s performance using the validation set. Adjust the training parameters if necessary to optimize the model’s performance.

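- Save and Use the Trained Model: Once training is complete, save the fine-tuned model for future use. The GPT4All desktop application loads models in GGUF format, so a checkpoint saved in Hugging Face format may need to be converted first. You can then integrate the model into your applications or use it for specific tasks, benefiting from its domain-specific knowledge.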
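As a sketch of the configuration and launch steps, the Python below loads a small configuration and assembles an accelerate launch command. The config keys, the train.py script name, and its --config flag are hypothetical placeholders; check the training code you actually use for its real option names. The tiny parser handles only flat key: value lines and stands in for a real YAML library such as PyYAML's yaml.safe_load.

```python
import shlex
from dataclasses import dataclass

# Hypothetical YAML config: key names are illustrative, not GPT4All's schema.
EXAMPLE_CONFIG = """\
model_name: my-base-model
tokenizer_name: my-base-model
batch_size: 8
lr: 2.0e-5
eval_every: 500
num_epochs: 2
"""


@dataclass
class TrainConfig:
    model_name: str
    tokenizer_name: str
    batch_size: int
    lr: float
    eval_every: int
    num_epochs: int


def parse_flat_yaml(text: str) -> dict:
    """Parse flat 'key: value' lines, ignoring blank lines and # comments.

    A minimal stand-in for a YAML library; it does not handle nesting,
    lists, quoting, or values that contain '#'.
    """
    out = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        key, _, value = line.partition(":")
        out[key.strip()] = value.strip()
    return out


def load_config(text: str) -> TrainConfig:
    """Convert parsed strings to typed fields and sanity-check them."""
    raw = parse_flat_yaml(text)
    cfg = TrainConfig(
        model_name=raw["model_name"],
        tokenizer_name=raw["tokenizer_name"],
        batch_size=int(raw["batch_size"]),
        lr=float(raw["lr"]),
        eval_every=int(raw["eval_every"]),
        num_epochs=int(raw["num_epochs"]),
    )
    if cfg.batch_size <= 0 or cfg.lr <= 0:
        raise ValueError("batch_size and lr must be positive")
    return cfg


def build_launch_command(script, config_path, num_processes=1, num_machines=1):
    """Assemble an `accelerate launch` command as an argument list.

    Arguments after the script name are passed through to the script itself;
    '--config' is a hypothetical flag that train.py would need to accept.
    """
    return [
        "accelerate", "launch",
        "--num_processes", str(num_processes),
        "--num_machines", str(num_machines),
        script,
        "--config", config_path,
    ]


if __name__ == "__main__":
    print(load_config(EXAMPLE_CONFIG))
    print(shlex.join(build_launch_command("train.py", "configs/finetune.yaml", 2)))
```

Building the command as a list rather than a single string makes it safe to pass to subprocess.run without shell quoting problems.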

The Hugging Face Datasets Library

The Hugging Face Datasets library plays a central role in preparing data for GPT4All fine-tuning. This open-source library, developed by Hugging Face, simplifies working with NLP datasets: it gives access to a large collection of datasets on the Hugging Face Hub, including benchmarks, corpora, and specialized datasets, and it can also load your own local files such as CSV or JSON Lines. Its unified API makes it easy to access, manipulate, and preprocess data, which makes it a practical choice for loading customized local data for training.
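To illustrate the preprocessing step, here is a minimal tokenization function of the kind you would pass to Dataset.map(..., batched=True). It assumes prompt and response columns and a tokenizer wrapped as a function that returns token IDs. Masking the prompt tokens with -100 (the label value PyTorch's cross-entropy loss ignores by default) so that the model is trained only on the responses is one common choice, not necessarily what GPT4All's own scripts do.

```python
from typing import Callable, Dict, List

# PyTorch's cross-entropy loss (and Hugging Face causal-LM models) ignore
# label positions set to -100 by default.
IGNORE_INDEX = -100


def preprocess_batch(
    batch: Dict[str, List[str]],
    tokenize: Callable[[str], List[int]],
    eos_id: int,
    max_length: int = 512,
) -> Dict[str, List[List[int]]]:
    """Tokenize prompt/response pairs for causal language-model fine-tuning.

    Assumes hypothetical "prompt" and "response" columns. Prompt positions are
    labeled IGNORE_INDEX so the loss covers only the response and the EOS
    token; sequences longer than max_length are truncated.
    """
    input_ids, attention_mask, labels = [], [], []
    for prompt, response in zip(batch["prompt"], batch["response"]):
        prompt_ids = tokenize(prompt)
        response_ids = tokenize(response) + [eos_id]
        ids = (prompt_ids + response_ids)[:max_length]
        labs = ([IGNORE_INDEX] * len(prompt_ids) + response_ids)[:max_length]
        input_ids.append(ids)
        attention_mask.append([1] * len(ids))
        labels.append(labs)
    return {"input_ids": input_ids, "attention_mask": attention_mask, "labels": labels}


# Possible wiring with Hugging Face Datasets and Transformers (not run here;
# the model name and file names are placeholders):
#
#   from datasets import load_dataset
#   from transformers import AutoTokenizer
#
#   tok = AutoTokenizer.from_pretrained("<your-base-model>")
#   ds = load_dataset("json", data_files={"train": "train.jsonl",
#                                         "validation": "val.jsonl"})
#   ds = ds.map(
#       lambda b: preprocess_batch(
#           b, lambda t: tok(t, add_special_tokens=False)["input_ids"], tok.eos_token_id
#       ),
#       batched=True,
#       remove_columns=["prompt", "response"],
#   )
```

Sequences are left unpadded here; padding them to a common length is left to the batching step of your training code.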

Challenges and Considerations

Training GPT4All with customized local data may pose certain challenges and considerations. Here are a few factors to keep in mind:

- Data Quality: The quality and relevance of your data greatly influence the performance of the trained model. Ensure that your data is clean and consistent, and check it for biases that could affect the model’s accuracy.

- Training Time: Fine-tuning GPT4All can be a time-consuming process, especially with larger datasets. Consider the computational resources available and plan accordingly to avoid excessive training times.

- Hyperparameter Tuning: Experimenting with different hyperparameter values may be necessary to optimize the model’s performance. Parameters such as the learning rate, batch size, and number of training epochs can significantly affect the final results, while the evaluation interval controls how often you can observe those effects.

- Hardware Requirements: Running GPT4All models on a CPU is practical, but fine-tuning is far more demanding: it needs much more memory and compute, and in practice it usually requires one or more GPUs. Make sure your hardware can handle the size of the model you plan to train.
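To gauge the hardware point, a commonly cited rule of thumb for full fine-tuning with the Adam optimizer in mixed precision is about 16 bytes of memory per parameter, before counting activations. The sketch below compares that with the weight storage of a 4-bit quantized model used only for inference. Both figures are rough approximations, and the function names are illustrative.

```python
def full_finetune_memory_gb(num_params: float, bytes_per_param: float = 16) -> float:
    """Rough memory for full fine-tuning with Adam in mixed precision.

    16 bytes per parameter = 2 (16-bit weights) + 2 (16-bit gradients)
    + 12 (32-bit master weights plus Adam's two moment estimates).
    Activations, temporary buffers, and framework overhead are NOT included,
    so treat the result as a lower bound.
    """
    return num_params * bytes_per_param / 1e9


def quantized_weights_gb(num_params: float, bits_per_weight: float = 4.0) -> float:
    """Approximate size of model weights quantized to bits_per_weight bits.

    Real quantization formats add some overhead (scales, metadata) on top.
    """
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    n = 7e9  # a 7-billion-parameter model
    print(f"full fine-tune: ~{full_finetune_memory_gb(n):.0f} GB")
    print(f"4-bit weights:  ~{quantized_weights_gb(n):.1f} GB")
```

For a 7-billion-parameter model this gives roughly 112 GB for full fine-tuning versus about 3.5 GB of quantized weights, which is why a model that runs comfortably on a laptop CPU can still need GPUs to train.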

Step-by-Step Guide to Training GPT4All

To help you navigate the training process, here is a step-by-step guide to training GPT4All with customized local data:

- Step 1: Install GPT4All: Download and install GPT4All using the one-click installer for your operating system so you can run and test models locally, and set up a Python environment for the training code.

- Step 2: Prepare Local Data: Collect, clean, format, and split your customized local data into training, validation, and test sets.

- Step 3: Choose a GPT4All Model: Select the GPT4All model that aligns with your training objectives, considering factors such as model size, response speed, and licensing restrictions.

- Step 4: Configure Training Parameters: Create a YAML configuration file specifying the training parameters, including model and tokenizer names, batch size, learning rate, and evaluation steps.

- Step 5: Load and Preprocess Data: Use the Hugging Face Datasets library to load and preprocess your local data, ensuring it matches the input format your training script expects.

- Step 6: Train the Model: Launch the training process from the terminal with accelerate launch, specifying the training script, the configuration file, and the desired number of processes and machines.

- Step 7: Monitor and Evaluate: Monitor the training progress and evaluate the model’s performance using the validation set. Adjust training parameters if necessary.

- Step 8: Save and Use the Trained Model: Once training is complete, save the trained GPT4All model for integration into applications or specific tasks.
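For Step 7, validation loss is usually the number to watch. The sketch below converts a mean per-token cross-entropy loss (in nats) into perplexity and adds a simple early-stopping check. Patience-based early stopping is one common way to decide when to stop training; it is shown here as an assumption, not as a built-in GPT4All feature.

```python
import math


def perplexity(mean_loss: float) -> float:
    """Perplexity is exp of the mean per-token cross-entropy loss (in nats)."""
    return math.exp(mean_loss)


class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` evals."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as improvement
        self.best = math.inf
        self.bad_evals = 0

    def update(self, val_loss: float) -> bool:
        """Record a validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience


if __name__ == "__main__":
    stopper = EarlyStopping(patience=2)
    for step, loss in enumerate([2.0, 1.5, 1.6, 1.7]):
        print(step, round(perplexity(loss), 2), stopper.update(loss))
```

Lower perplexity on the validation set means the model assigns higher probability to held-out text; keep the checkpoint with the best validation loss rather than the last one.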

Troubleshooting Tips

During the training process, you may encounter certain issues or challenges. Here are some troubleshooting tips to help you overcome common obstacles:

- Insufficient Memory: If you encounter memory errors, consider reducing the batch size or using a model with smaller memory requirements.

- Slow Training: Training times can vary depending on the dataset size and model complexity. If training is taking too long, consider reducing the dataset size or optimizing the training parameters.

- Inaccurate Results: If the trained model produces inaccurate or nonsensical outputs, review your data quality, preprocessing steps, and hyperparameter values. Experiment with different configurations to improve the model’s performance.

- Hardware Compatibility: Ensure that your hardware meets the minimum requirements for training GPT4All. Check the documentation for specific hardware recommendations and compatibility information.
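When you reduce the batch size to fix memory errors, one common way to keep the effective batch size (the number of examples per optimizer update) unchanged is gradient accumulation: gradients from several smaller batches are accumulated before each optimizer step. The helper below only does the arithmetic and its name is hypothetical; Hugging Face Transformers exposes the corresponding setting as gradient_accumulation_steps in TrainingArguments.

```python
def accumulation_steps(target_batch: int, per_device_batch: int, num_processes: int = 1) -> int:
    """Gradient-accumulation steps that preserve the effective batch size.

    effective batch = per_device_batch * num_processes * accumulation_steps
    """
    if min(target_batch, per_device_batch, num_processes) <= 0:
        raise ValueError("all sizes must be positive")
    per_step = per_device_batch * num_processes
    if target_batch % per_step != 0:
        raise ValueError("target_batch must be a multiple of per_device_batch * num_processes")
    return target_batch // per_step


if __name__ == "__main__":
    # A batch size of 32 ran out of memory; drop to 8 per device on one GPU.
    print(accumulation_steps(target_batch=32, per_device_batch=8))
```

Training then takes more forward and backward passes per update, so each optimizer step is slower, but peak memory is set by the smaller per-device batch.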

Conclusion

Training GPT4All with customized local data offers significant advantages in terms of portability, privacy, and domain-specific knowledge. By following the step-by-step guide and using the Hugging Face Datasets library, you can fine-tune GPT4All to meet your specific requirements. Keep in mind the challenges and considerations involved, and adjust training parameters as needed to optimize the model’s performance. With GPT4All, you can harness the power of language models for your applications and unlock new possibilities in natural language processing.

FAQs

Q1. Can I train GPT4All models with my own local data?

Yes. GPT4All models can be fine-tuned on your own local data. By following the guidelines above and using the Hugging Face Datasets library, you can fine-tune a GPT4All model to learn from your specific domain knowledge.

Q2. How can I ensure the quality of my training data?

To ensure data quality, it is crucial to clean and preprocess your local data before training. Remove any noise, inconsistencies, or biases that may impact the model’s accuracy. Additionally, validate the data against ground truth or expert knowledge to identify any potential issues.

Q3. What are the hardware requirements for training GPT4All?

Running GPT4All models works on relatively modern computers without GPUs. Fine-tuning is much more demanding: larger models need substantially more memory and compute, and in practice training usually requires one or more GPUs. Check the GPT4All documentation and the requirements of your training framework to confirm your hardware is sufficient.

Q4. Can I fine-tune GPT4All models for commercial use?

Licensing varies by model. Some models offered through GPT4All are released under licenses that permit commercial use, while others carry restrictions. Review the licensing terms of each specific model before using it commercially to ensure compliance.

Q5. How long does it take to train a GPT4All model?

The training time for GPT4All models can vary depending on factors such as dataset size, model complexity, and hardware resources. Larger datasets and more complex models may require more time for training. It is advisable to plan accordingly and allocate sufficient time for the training process.
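To plan training time, it helps to count optimizer steps first and then multiply by a time per step measured in a short trial run. The sketch assumes the last partial batch of each epoch is kept; the seconds-per-step figure is something you measure on your own hardware, not a fixed value, and the function names are illustrative.

```python
import math


def optimizer_steps(num_examples: int, effective_batch: int, num_epochs: int) -> int:
    """Total optimizer updates: ceil(examples / effective batch) per epoch."""
    steps_per_epoch = math.ceil(num_examples / effective_batch)
    return steps_per_epoch * num_epochs


def estimated_hours(total_steps: int, seconds_per_step: float) -> float:
    """Wall-clock estimate from a measured seconds-per-step figure."""
    return total_steps * seconds_per_step / 3600


if __name__ == "__main__":
    steps = optimizer_steps(num_examples=10_000, effective_batch=32, num_epochs=3)
    print(steps, round(estimated_hours(steps, seconds_per_step=2.0), 2))
```

For example, 10,000 examples with an effective batch size of 32 over 3 epochs gives 313 steps per epoch, or 939 steps in total; at a measured 2 seconds per step, that is about 0.52 hours, before evaluation and checkpointing overhead.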

Q6. Can I fine-tune GPT4All models with GPUs?

Yes, and in practice you usually should. GPT4All models can run on a CPU without a GPU, but fine-tuning is far more compute-intensive, and training on a CPU alone is typically impractically slow for models of this size. If you have access to GPUs, they substantially accelerate the training process.

Q7. Does GPT4All support offline mode?

Yes, GPT4All can be used offline once the models and data are downloaded and saved locally. You do not require a constant internet connection to access and utilize the trained GPT4All models.

Q8. Can I contribute to the development of GPT4All?

As an open-source ecosystem, GPT4All welcomes contributions from the community. You can participate in the development, improvement, and fine-tuning of existing models or even create your own models for integration into GPT4All.

Q9. Can I use GPT4All for languages other than English?

Multilingual support depends on the underlying model: some models offered through GPT4All handle languages other than English. You can fine-tune a model with localized data to improve its language generation in your target language.

Q10. Can I use GPT4All for tasks other than natural language processing?

While GPT4All is primarily designed for natural language processing tasks, its flexible architecture allows for potential application in other domains. Experimentation and adaptation may be required to utilize GPT4All for tasks beyond traditional NLP applications.