How To Use Whisper API

In today’s fast-paced world, voice technology has become an integral part of our lives. From virtual assistants to voice-controlled devices, the ability to interact with technology through speech has revolutionized the way we communicate and engage with digital platforms. One powerful tool that enables developers to harness the potential of voice is the Whisper API. In this article, we will explore what the Whisper API is, its key features, and how you can leverage it to enhance your applications and provide a seamless voice experience to your users.

Understanding the Whisper API:

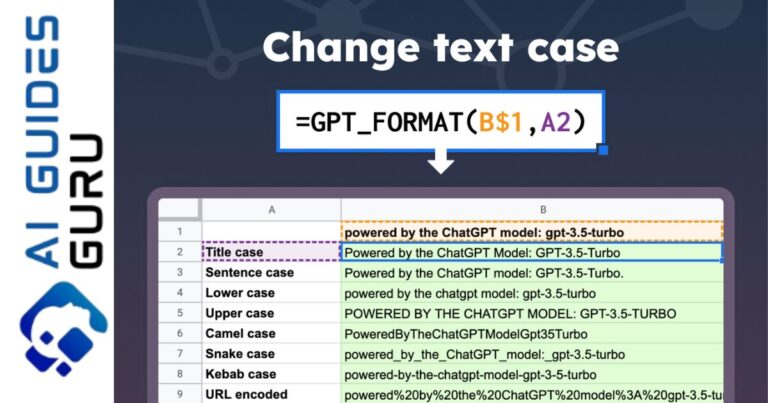

The Whisper API, developed by OpenAI, is a speech recognition service that converts spoken audio into written text. With Whisper, you can transcribe recordings in many languages and translate foreign-language speech into English text, which opens up possibilities for voice-enabled applications, meeting notes, subtitles, voice assistants, and more. Note that Whisper does not generate speech: turning text into audio is handled by OpenAI’s separate text-to-speech endpoint, so the Whisper API covers the listening half of a voice experience rather than the speaking half.

Key Features of the Whisper API:

The Whisper API offers a focused set of features for developers who want to bring voice input into their applications. First, it exposes two operations: transcription, which returns text in the language that was spoken, and translation, which returns English text for speech in another language. The underlying model was trained on a large multilingual dataset, so it copes reasonably well with accents, background noise, and technical vocabulary.

Additionally, the API accepts a small number of request parameters that let you steer the result, such as a language hint, an optional text prompt that biases the spelling of names and jargon, a sampling temperature, and the response format (plain text, JSON, or subtitle formats such as SRT and VTT). It does not expose synthesis controls such as speaking rate, pitch, or volume, because it produces text rather than audio.

Integrating the Whisper API into Your Applications:

Integrating the Whisper API into your applications is a straightforward process. OpenAI provides documentation and guides that make it easy for developers to get started. The API has a simple interface: you upload an audio file and receive the transcribed text, typically within seconds for short clips.

To begin, you’ll need an OpenAI API key, which you can obtain by signing up on the OpenAI website. Once you have your API key, you can make requests to the Whisper API endpoint, passing the audio file you want transcribed. The API will respond with the recognized text, which you can then use in your applications with your preferred programming language or framework.

Unlocking the Potential of Voice with the Whisper API:

The Whisper API opens up a world of possibilities for developers looking to leverage voice technology. You can enhance your applications by adding captions to video tutorials, transcribing calls in customer support systems, or letting users dictate notes and commands instead of typing them. By incorporating the Whisper API, you can create a more accessible experience for your users, fostering increased engagement and satisfaction.

In this article, we introduced the Whisper API and explored how it empowers developers to harness the power of voice in their applications. We discussed its key features and highlighted the ease of integration. By incorporating the Whisper API into your projects, you can unlock the potential of voice technology and provide your users with a more engaging and interactive experience. So, why wait? Dive into the world of voice-enabled applications and bring your ideas to life with the Whisper API.

How to Access the Whisper API?

Accessing the Whisper API and integrating it into your applications is a straightforward process. Here, we will guide you through the necessary steps to get started:

Sign up for an OpenAI account:

To access the Whisper API, you’ll need to sign up for an account on the OpenAI platform. Visit the OpenAI website and follow the registration process. Once you have an account, you’ll be able to obtain an API key, which you’ll use to authenticate your requests.

Obtain your API key:

After signing up and logging into your OpenAI account, navigate to the API section. Here, you’ll find information on how to generate your API key. It’s important to keep your API key secure, as it grants access to your OpenAI resources and usage.

Review the documentation:

OpenAI provides comprehensive documentation and resources to guide you through the process of using the Whisper API. Take some time to familiarize yourself with the documentation, as it contains detailed information on the API endpoints, request formats, and response structures.

Make API requests:

Once you have your API key and are familiar with the documentation, you can start making requests to the Whisper API. Construct a POST request to the API endpoint, providing the necessary parameters such as the audio file you want to transcribe, the model name, and any additional options you wish to set. Send the request using your preferred programming language or API client.
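As a minimal sketch of such a request, the snippet below builds (but does not send) a multipart upload using only the Python standard library. It assumes the transcription endpoint URL and the whisper-1 model name shown in the code, and that the hosted Whisper endpoint takes an audio file and returns text; the function name is a hypothetical helper, so check the current API reference before relying on any of these details.

```python
import urllib.request
import uuid

# Assumed endpoint for transcription requests; verify against the API reference.
TRANSCRIPTION_URL = "https://api.openai.com/v1/audio/transcriptions"


def build_transcription_request(api_key, audio_bytes, filename, model="whisper-1"):
    """Build (but do not send) a multipart/form-data POST request."""
    boundary = uuid.uuid4().hex
    # First part: the model name as a plain form field.
    # Second part: the audio file, followed by its raw bytes.
    head = (
        f"--{boundary}\r\n"
        'Content-Disposition: form-data; name="model"\r\n\r\n'
        f"{model}\r\n"
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return urllib.request.Request(
        TRANSCRIPTION_URL,
        data=head + audio_bytes + tail,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )
```

Passing the returned object to urllib.request.urlopen would send the request; keeping construction separate from sending makes the request easy to inspect and test without network access.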

Handle the API response:

Upon making a request to the Whisper API, you will receive a response containing the transcribed text in the format you requested. Depending on your application’s requirements, you can choose to save the transcript, display it directly to your users, or pass it to other parts of your application.
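A small sketch of response handling, assuming the default JSON response carries the transcript in a "text" field and that failures arrive as an "error" object with a "message" field. The function name is hypothetical and the field names should be confirmed against the API reference.

```python
import json


def extract_transcript(body: bytes) -> str:
    """Return the transcript from a JSON response body, or raise on an API error."""
    payload = json.loads(body.decode("utf-8"))
    if "error" in payload:
        # Assumed error shape: {"error": {"message": "..."}}
        raise RuntimeError(payload["error"].get("message", "unknown API error"))
    return payload["text"].strip()
```

For example, extract_transcript(b'{"text": " Hello world. "}') returns the string "Hello world." with the surrounding whitespace removed.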

Implement error handling and scalability:

As with any API integration, it’s important to implement proper error handling mechanisms to gracefully handle any unexpected issues. Additionally, consider scalability and performance optimizations to ensure that your application can handle increased traffic and usage.

Test and iterate:

Once you have integrated the Whisper API into your application, thoroughly test its functionality and user experience. Gather feedback from users and iterate on your implementation to enhance the voice experience and address any potential issues.

By following these steps, you can successfully access and utilize the power of the Whisper API in your applications, allowing you to create rich, voice-enabled experiences for your users. OpenAI’s documentation and developer resources will be your go-to references as you explore the various features and possibilities of the Whisper API. So, get started today and unlock the true potential of voice technology in your applications!

How to Find Your Secret API Key for the Whisper API in Your OpenAI Account

To access the Whisper API and make requests, you’ll need to obtain your secret API key from your OpenAI account. Here’s a step-by-step guide on how to find your API key:

Sign in to your OpenAI account:

Visit the OpenAI website and sign in using your registered credentials. If you don’t have an account yet, you’ll need to sign up and create one.

Navigate to the API section:

Once you’re logged in, navigate to the API section of your OpenAI account. You can usually find this in the account dashboard or by accessing the API menu.

Create a new API project:

If you haven’t created an API project yet, you’ll need to set one up. Click on the “Create New Project” button or a similar option to create a new project specifically for the Whisper API.

Generate your API key:

Within your API project, you should find an option to generate an API key. Click on the relevant button or link to generate your secret API key. Make sure to keep this key secure, as it grants access to your OpenAI resources and usage.

Copy your API key:

Once your API key is generated, copy it to your clipboard or save it in a secure location right away, because the full secret key is displayed only at creation time. It will be a long string of characters and numbers that is tied to your account and authorizes API requests.

Store your API key securely:

It’s crucial to store your API key securely to prevent unauthorized access to your OpenAI resources. Avoid hardcoding the key directly into your application’s source code. Instead, consider using environment variables or a configuration file that is excluded from version control. This practice ensures that your API key remains private and can be easily updated if necessary.
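One way to follow this advice is to read the key from an environment variable at startup. The sketch below assumes the variable is called OPENAI_API_KEY, which is the name OpenAI’s own client libraries look for; the function name is a hypothetical helper.

```python
import os


def load_api_key(env=None, var_name="OPENAI_API_KEY"):
    """Read the API key from the environment instead of hardcoding it."""
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; export it before running the app")
    return key
```

Accepting the environment as an argument keeps the function easy to test, and failing early with a clear message avoids confusing authentication errors later.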

Use your API key for authentication:

In your application, when making requests to the Whisper API, include your API key as part of the authentication process. The OpenAI API expects the key in the Authorization header of each request as a bearer token, and most API clients or programming languages provide a straightforward way to set request headers.
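A minimal sketch of the authentication header, assuming the bearer-token scheme used by the OpenAI API. Both function names are hypothetical helpers; the second one is there so that a key can be mentioned in logs without being exposed.

```python
def auth_headers(api_key):
    """Return the HTTP header that authenticates a request."""
    return {"Authorization": f"Bearer {api_key}"}


def mask_key(api_key, visible=4):
    """Hide all but the last few characters of a key for safe logging."""
    if len(api_key) <= visible:
        return "*" * len(api_key)
    return "*" * (len(api_key) - visible) + api_key[-visible:]
```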

By following these steps, you can easily find and retrieve your secret API key for the Whisper API from your OpenAI account. Remember to handle your API key with care and keep it secure to protect your account and resources. With your key in hand, you can begin integrating the Whisper API into your applications and unlock the power of voice technology.

Understanding APIs and the Whisper API

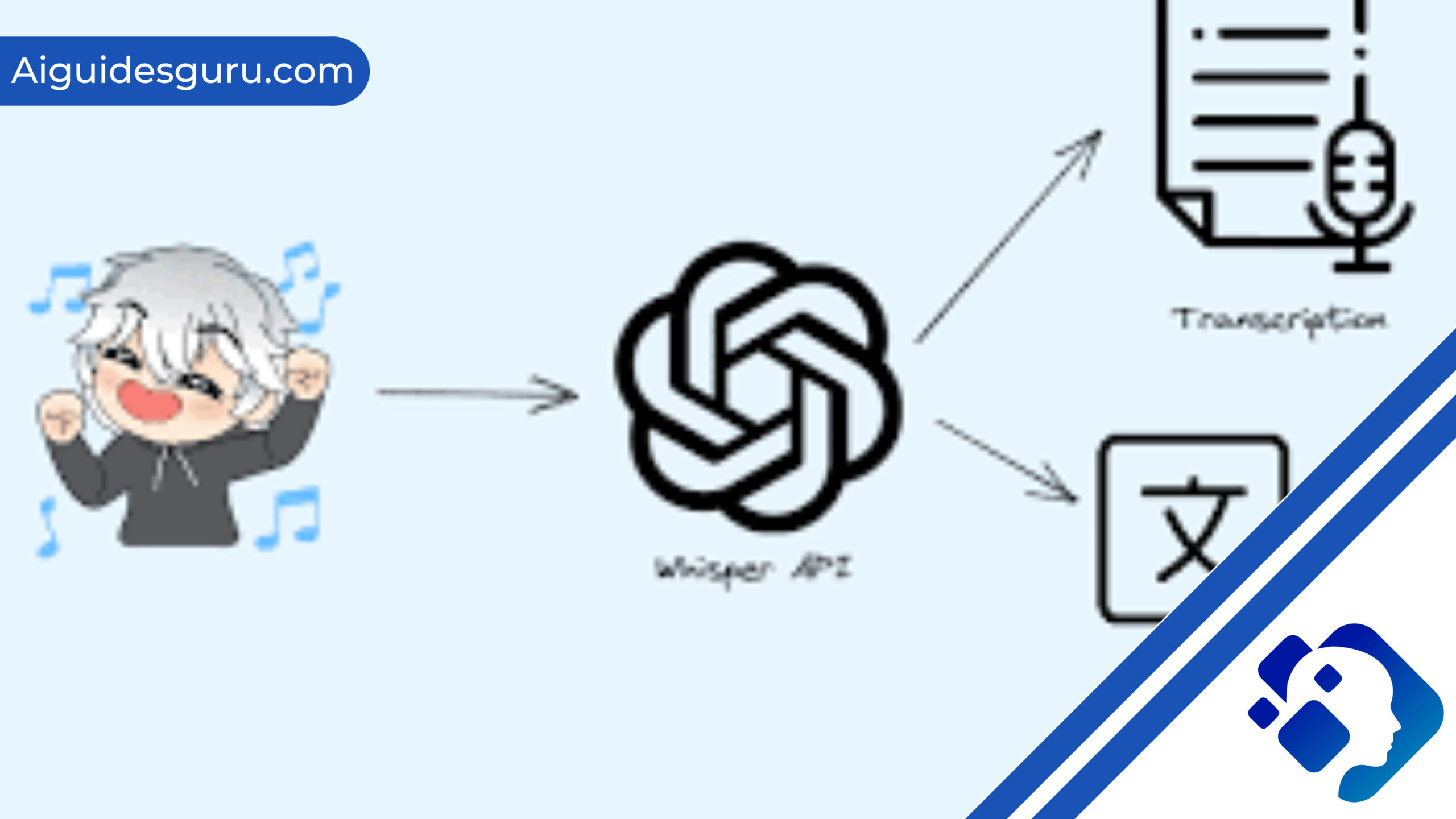

APIs (Application Programming Interfaces) serve as a crucial bridge between software applications, enabling them to communicate and interact with each other seamlessly. In this section, we will delve into the concept of APIs and specifically explore the Whisper API, its purpose, and its significance in the realm of voice technology.

What is an API?

An API acts as an intermediary layer that allows different software systems to interact and exchange data. It defines a set of rules and protocols that govern how applications can communicate with each other. APIs enable developers to leverage the functionality of existing software components, services, or platforms without having to build everything from scratch. By providing a standardized interface, APIs facilitate integration and interoperability, making it easier to create powerful, interconnected applications.

Introducing the Whisper API:

The Whisper API, developed by OpenAI, is an API specifically designed for speech recognition. It uses a machine learning model trained on a large amount of multilingual audio to turn spoken audio into written text. The Whisper API enables developers to add accurate transcription and speech translation to their applications, opening up a world of possibilities for voice-enabled experiences.

Key Features of the Whisper API:

The Whisper API offers several key features that make it a valuable tool for developers:

a. Accurate transcription: The Whisper API converts recorded speech into text with punctuation and capitalization. It copes with a variety of accents, speaking styles, and moderate background noise, resulting in transcripts that need little manual cleanup.

b. Configuration options: The Whisper API lets developers pass a language hint, a prompt that biases the spelling of names and jargon, a sampling temperature, and a response format. These options help the transcripts align with the application’s needs.

c. Multilingual support: The Whisper API supports multiple languages, enabling developers to create voice-enabled applications for a global audience. Whether the speaker uses English, Spanish, French, or another supported language, the Whisper API can transcribe the audio, and a separate translation endpoint returns English text.

Use Cases for the Whisper API:

The Whisper API can be applied across a wide range of use cases, including but not limited to:

a. Voice assistants: Developers can use the Whisper API as the listening component of a voice assistant, turning spoken queries and commands into text the application can act on.

b. Podcasts and video: The Whisper API makes it easier to convert spoken content into text, producing transcripts, show notes, and subtitles for podcasts, lectures, and videos.

c. Accessibility features: By utilizing the Whisper API, developers can enhance accessibility by providing captions for users who are deaf or hard of hearing and voice input for users who find typing difficult.

d. Interactive applications: The Whisper API can be used in interactive applications where users speak rather than type, such as language-learning tools, dictation, or voice-controlled games.

Getting Started with the Whisper API

If you’re eager to get started with the Whisper API and explore the capabilities of speech recognition, follow these steps to begin integrating it into your applications:

Sign up for an OpenAI account:

To access the Whisper API, you’ll need to create an account on the OpenAI platform. Visit the OpenAI website and follow the registration process to create your account.

Understand the pricing and usage:

Familiarize yourself with the pricing and usage details of the Whisper API. Usage is billed according to the amount of audio you submit, so make sure you understand the costs associated with your expected volume.

Review the documentation:

OpenAI provides comprehensive documentation for the Whisper API, including guides, reference materials, and examples. Take the time to read through the documentation to understand the API’s capabilities, endpoints, request parameters, and response formats. This will help you better utilize the API in your applications.

Obtain your API key:

Generate your API key within your OpenAI account. The API key is a unique identifier that authorizes your access to the Whisper API. Keep your API key secure and do not expose it publicly.

Choose your API client or programming language:

Decide on the programming language or API client you want to use to interact with the Whisper API. You can choose from a wide range of languages and libraries that support making HTTP requests.

Set up your development environment:

Install any necessary dependencies or libraries required for your chosen programming language or API client. This may include HTTP libraries, JSON parsing libraries, or other tools needed to make API requests.

Make your first API request:

Construct an HTTP POST request to the Whisper API endpoint using your chosen programming language or API client. Include your API key in the Authorization header. Attach the audio file, specify the model name, and add any optional parameters such as the spoken language or the response format.
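If you prefer a client library over raw HTTP, a first request can be sketched as follows. This assumes the official openai Python package (version 1 or later), whose client object exposes audio.transcriptions.create, and the whisper-1 model name; note that the hosted Whisper endpoint takes audio in and returns text. The wrapper function is hypothetical, and the client is passed in so the function can be exercised without network access.

```python
def transcribe_file(client, path, model="whisper-1"):
    """Upload one audio file through an OpenAI-style client and return the text."""
    with open(path, "rb") as audio_file:
        # The client sends the multipart upload and parses the JSON response.
        result = client.audio.transcriptions.create(model=model, file=audio_file)
    return result.text
```

With the package installed and OPENAI_API_KEY set, usage would look like: from openai import OpenAI, then transcribe_file(OpenAI(), "meeting.mp3"), where the file name is only a placeholder.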

Handle the API response:

Upon making a request to the Whisper API, you will receive a response containing the transcribed text in the requested format. Handle the response in your application as per your requirements. You may choose to save the transcript, display it to users, or process it further.

Iterate and optimize:

Test and iterate on your implementation to refine the voice experience and address any issues or limitations. Experiment with different recordings, language hints, prompts, and response formats to find the best settings for your application.

Ensure error handling and scalability:

Implement proper error handling in your application to handle any potential issues with API requests or responses. Additionally, consider scalability and performance optimizations as your application’s usage grows.

By following these steps, you can get started with the Whisper API and begin leveraging its capabilities for speech recognition in your applications. OpenAI’s documentation and resources will be valuable references as you explore the intricacies of the API and create compelling voice-enabled experiences for your users.

Exploring the Whisper API Documentation

The Whisper API documentation provides a wealth of information to help you understand and utilize the capabilities of the API effectively. Here’s a guide on how to explore the Whisper API documentation:

Access the documentation:

Visit the OpenAI website and navigate to the documentation section. Look for the section related to the Whisper API. It may be labeled as “Speech to text,” “Audio,” or similar.

Understand the overview:

Start by reading the overview or introduction section of the Whisper API documentation. This section typically provides an overview of the API’s purpose, key features, and use cases. It helps you grasp the fundamentals and sets the context for further exploration.

Learn about API endpoints and request formats:

The documentation should outline the available API endpoints and their functionalities. Take note of the endpoints you can use for transcription and for translation into English. Understand the request formats, including the required parameters, optional parameters, and the expected data formats for input and output.

Explore example requests and responses:

Look for example requests and responses provided in the documentation. These examples demonstrate how to structure your requests and interpret the returned data. They can serve as practical references to help you understand the API’s behavior and guide your own implementation.

Discover input and output options:

The Whisper API documentation should describe the various options available for customizing the transcription process. Explore the parameters that allow you to control the model, spoken language, prompt, temperature, and response format. Understand how to attach the input audio and which file types and sizes are accepted.
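To make these options concrete, here is a hedged sketch that assembles and validates the form fields for a transcription request. The parameter names (model, language, prompt, response_format, temperature) and the list of response formats reflect the API as commonly documented but should be treated as assumptions; the function itself is a hypothetical helper.

```python
# Assumed set of response formats; confirm against the API reference.
ALLOWED_FORMATS = {"json", "text", "srt", "verbose_json", "vtt"}


def build_form_fields(model="whisper-1", language=None, prompt=None,
                      response_format="json", temperature=0.0):
    """Validate options and return them as string form fields."""
    if response_format not in ALLOWED_FORMATS:
        raise ValueError(f"unsupported response_format: {response_format}")
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0 and 1")
    fields = {
        "model": model,
        "response_format": response_format,
        "temperature": str(temperature),
    }
    # Optional hints are only sent when provided.
    if language:
        fields["language"] = language  # e.g. an ISO 639-1 code such as "es"
    if prompt:
        fields["prompt"] = prompt
    return fields
```

Validating locally gives faster and clearer feedback than waiting for the server to reject a malformed request.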

Understand language support:

The Whisper API can transcribe many languages. Consult the documentation to learn which languages are supported and how to pass a language hint. Accuracy varies between languages, so check the documentation’s notes on the languages that matter for your application.

Review usage guidelines and best practices:

Documentation often includes usage guidelines and best practices for working with the Whisper API. Pay attention to any rate limits, usage quotas, or other restrictions mentioned. It’s essential to adhere to these guidelines to ensure a smooth integration and avoid any potential issues.

Check for code samples and SDKs:

Some API documentation may include code samples or software development kits (SDKs) in various programming languages. These resources can accelerate your integration process by providing pre-built code snippets or libraries that handle API interactions.

Explore advanced features:

If you’re looking to dive deeper into the Whisper API, the documentation may cover more advanced features, such as timestamps, prompting, or handling long audio files. Take the time to explore these sections if they align with your application’s requirements.

Stay updated:

Keep an eye on the documentation for any updates, new features, or changes to the Whisper API. APIs evolve over time, and OpenAI may introduce enhancements or modifications to improve the functionality or performance. Staying updated ensures you’re leveraging the latest capabilities.

By thoroughly exploring the Whisper API documentation, you’ll gain a solid understanding of the API’s capabilities, endpoints, parameters, and best practices. This knowledge will empower you to effectively integrate the Whisper API into your applications and create compelling voice-enabled experiences for your users.

Best Practices for Using the Whisper API

To make the most of the Whisper API and ensure a smooth integration into your applications, it’s important to follow certain best practices. These practices will help you optimize your usage, improve the quality of your transcripts, and ensure a positive user experience. Here are some best practices for using the Whisper API:

Understand usage limits and pricing:

Familiarize yourself with the usage limits, rate limits, and pricing details associated with the Whisper API. Transcription is billed by the duration of the audio you submit, and rate limits depend on your account’s usage tier, so be aware of the costs and plan accordingly. Monitoring your API usage and understanding the pricing structure will help you stay within budget and avoid any unexpected charges.
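A rough budget check can be done with simple arithmetic. The sketch below assumes billing is proportional to audio duration and uses a placeholder price of 0.006 USD per minute; that figure is an assumption for illustration only, so substitute the rate from OpenAI’s current pricing page.

```python
# Placeholder rate for illustration; replace with the current published price.
ASSUMED_PRICE_PER_MINUTE_USD = 0.006


def estimate_cost(durations_seconds, price_per_minute=ASSUMED_PRICE_PER_MINUTE_USD):
    """Estimate the cost in USD of transcribing clips of the given lengths."""
    total_minutes = sum(durations_seconds) / 60
    return round(total_minutes * price_per_minute, 4)
```

For instance, three clips of 60, 120, and 30 seconds add up to 3.5 minutes, which at the placeholder rate comes to about 0.021 USD.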

Test and iterate with small requests:

When starting with the Whisper API, it’s advisable to test and iterate with small requests before scaling up. Submitting short audio clips allows you to quickly experiment with different recordings, parameters, and response formats. It also helps in understanding the response format and catching problems before you process large files.
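Before uploading anything, a quick local check can catch files that the service is likely to reject. This sketch assumes a 25 MB upload limit and the list of accepted file extensions shown in the code; both are assumptions to verify against the documentation, and the function name is hypothetical.

```python
import os

# Assumed limits; confirm both against the current documentation.
MAX_UPLOAD_BYTES = 25 * 1024 * 1024
SUPPORTED_EXTENSIONS = {"mp3", "mp4", "mpeg", "mpga", "m4a", "wav", "webm"}


def check_audio_file(path, max_bytes=MAX_UPLOAD_BYTES):
    """Return a list of problems found with the file (empty if it looks fine)."""
    problems = []
    extension = os.path.splitext(path)[1].lower().lstrip(".")
    if extension not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported file type: {extension or 'none'}")
    size = os.path.getsize(path)
    if size > max_bytes:
        problems.append(f"file is {size} bytes, limit is {max_bytes}")
    return problems
```

A file that fails the size check is a candidate for splitting into shorter clips or re-encoding at a lower bitrate.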

Tune the request options:

The Whisper API offers a few options that influence the transcript, such as a language hint, a text prompt, and the sampling temperature. Experiment with these parameters to fit your application. Specifying the spoken language and listing unusual names or jargon in the prompt can noticeably improve accuracy.

Split long recordings at natural pauses:

When a recording is too long or too large to upload in one request, split it into smaller chunks. Cutting at pauses or sentence boundaries rather than mid-word gives the model complete phrases to work with and makes the pieces easier to stitch back together.

Handle audio preprocessing:

Preprocess your input audio, if needed, to ensure optimal results from the Whisper API. Depending on your application, you may want to trim long silences, reduce background noise, or convert the recording to a supported and reasonably compressed format. Clean audio can contribute to better transcripts.

Implement error handling and retries:

Incorporate robust error handling in your application to handle any potential errors or failures when making API requests. Network issues or temporary service unavailability can occur, so it’s important to implement proper error handling and retry mechanisms to ensure a reliable integration.
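A retry mechanism with exponential backoff can be sketched in a few lines. Everything here is illustrative: TransientError is a hypothetical exception that your own request code would raise for timeouts, rate-limit responses, or temporary server errors, and the delay values are arbitrary defaults.

```python
import time


class TransientError(Exception):
    """Hypothetical marker for failures that are worth retrying."""


def call_with_retries(func, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call func, retrying on TransientError with exponentially growing delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts:
                raise  # Out of attempts: surface the last error to the caller.
            # Wait 1x, 2x, 4x, ... the base delay before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
```

In production it is common to add a small random jitter to each delay so that many clients do not retry at the same instant; it is left out here to keep the behavior deterministic.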

Monitor and analyze transcription quality:

Regularly monitor and analyze the quality of the transcripts produced by the Whisper API. Pay attention to factors such as misheard words, names and technical terms, punctuation, and text that appears during silence. This allows you to identify any areas for improvement and make adjustments to enhance the user experience.
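One common way to put a number on recognition quality is the word error rate (WER): the word-level edit distance between a human-made reference transcript and the API’s output, divided by the number of reference words. The sketch below is a plain dynamic-programming implementation with simple lowercase, whitespace-based tokenization, which is an assumption; real evaluations usually normalize punctuation as well.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] holds the edit distance between the processed reference words
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, substitution))
        prev = cur
    return prev[-1] / len(ref)
```

Tracking this value on a small set of hand-checked recordings makes it easier to tell whether a change in prompts, preprocessing, or settings actually helped.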

Respect user privacy and data protection:

Ensure that you handle user data and privacy with utmost care and in compliance with relevant regulations. Avoid storing or transmitting sensitive user information unnecessarily. OpenAI provides guidelines on data usage and privacy, so familiarize yourself with those guidelines to ensure compliance.

Stay updated with API changes:

APIs can evolve over time, with new features, improvements, or changes introduced by the API provider. Stay updated with the Whisper API documentation, release notes, and any announcements from OpenAI to take advantage of new capabilities and ensure your integration remains compatible.

Seek community support and share feedback:

Engage with the developer community, forums, or online groups related to the Whisper API. Seek support from fellow developers who may have encountered similar challenges or have valuable insights to share. Additionally, provide feedback to OpenAI regarding your experiences and suggestions for improving the API.

By following these best practices, you can optimize your usage of the Whisper API, improve the quality of your transcripts, and deliver a seamless voice experience to your application users. Continuously iterating, experimenting, and staying informed about updates will help you make the most of this powerful speech recognition tool.

Benefits of Using the Whisper API

The Whisper API offers a range of benefits that can enhance your applications and provide a compelling voice experience for your users. Here are some key benefits of using the Whisper API:

High-quality speech recognition:

The Whisper API uses a large machine learning model trained on multilingual audio to transcribe speech. The transcripts include punctuation and capitalization and hold up well against accents and background noise, which lets you build reliable voice-enabled applications.

Multilingual support:

The Whisper API supports multiple languages, allowing you to transcribe speech in different languages and cater to a global audience. Whether your application needs to handle English, Spanish, French, German, or other supported languages, the Whisper API can transcribe it, and it can also translate non-English speech into English text.

Configurable output:

With the Whisper API, you can adjust the output to match your application’s requirements. You can request plain text, JSON, or subtitle formats, ask for timestamps, and pass a prompt that nudges the model toward the spelling of product names or domain terms.

Versatile applications:

The Whisper API can be used in a wide range of applications and industries. Whether you’re developing voice assistants, call-center analytics, meeting transcription, subtitling, accessibility tools, or any other application that needs to turn speech into text, the Whisper API can be a valuable tool.

Improved accessibility:

By integrating the Whisper API, you can make your applications more accessible to users who are deaf or hard of hearing, or who find typing difficult. Spoken content can be transformed into captions and transcripts, and users can provide input by voice, enhancing inclusivity.

Time and cost savings:

Utilizing the Whisper API can save you time and resources compared to transcribing recordings by hand. Instead of paying for manual transcription or running your own speech recognition infrastructure, you can generate transcripts on demand with the Whisper API, reducing turnaround time and costs.

Scalability and flexibility:

The API infrastructure is hosted and scaled by OpenAI, so you do not need to run speech recognition models yourself. Whether you have a small user base or a large number of concurrent users, throughput is governed by your account’s rate limits rather than by your own hardware. The flexibility of the API also enables you to adapt how transcripts are produced to changing requirements or user feedback.

Integration with existing systems:

The Whisper API can be integrated into your existing applications, platforms, or workflows. Whether you’re building web applications, mobile apps, or backend systems, you can make API requests to transcribe audio and incorporate the text into your desired user experience.

Future-proof technology:

OpenAI continues to invest in research and development in speech technology. By utilizing the hosted API, you benefit from ongoing enhancements and updates without having to retrain or redeploy models yourself.

Developer-friendly ecosystem:

OpenAI provides comprehensive documentation, code samples, and developer resources to support your integration with the Whisper API. Additionally, there is an active developer community where you can seek assistance, share insights, and collaborate with fellow developers working with the API.

By leveraging the benefits of the Whisper API, you can enhance the voice capabilities of your applications, deliver a more engaging user experience, and unlock new possibilities for communication and accessibility. The API’s transcription quality, multilingual support, and versatility make it a powerful tool for developers across various industries and use cases.

Conclusions

Understand the documentation: Familiarize yourself with the Whisper API documentation, including the overview, endpoints, request formats, and output options. Gain an understanding of the API’s capabilities and how it can be integrated into your application.

Set up your environment: Ensure you have the necessary credentials and access to the Whisper API. Set up your development environment, including any required software libraries or SDKs.

Make API requests: Construct API requests using the appropriate endpoint and request format specified in the documentation. Include the necessary parameters, such as the audio file and the model name, to generate a transcript.

Handle API responses: Receive and process the API responses, which will contain the transcribed text in the chosen format (e.g., JSON, plain text, or subtitles). Depending on your application, you may need to store, display, or post-process the transcript.

Tune request options: Experiment with the options provided by the Whisper API. Adjust parameters like the language hint, prompt, and temperature to improve accuracy for your audio.

Iterate and refine: Test and iterate with different recordings, options, and API configurations to refine the transcription process. Continuously evaluate the quality of the transcripts and make adjustments as needed.

Implement error handling and retries: Incorporate robust error handling and retry mechanisms in your application to handle any potential errors or failures when interacting with the Whisper API. Ensure your implementation can gracefully handle and recover from network issues or temporary service unavailability.

Monitor usage and performance: Keep track of your API usage and monitor the performance of the Whisper API in your application. Consider implementing logging or analytics to gather insights on usage patterns, user feedback, and the quality of the transcripts.

Stay updated: Stay informed about any updates, enhancements, or changes to the Whisper API. Periodically review the documentation and release notes provided by OpenAI to ensure you’re leveraging the latest features and improvements.

Seek support and share feedback: Engage with the developer community or OpenAI’s support channels to seek assistance, share experiences, and provide feedback. By actively participating in the community, you can gain valuable insights and contribute to the ongoing development and improvement of the API.

By following these steps, you can effectively integrate the Whisper API into your applications, leverage its capabilities for speech recognition, and create engaging voice experiences for your users. Remember to adhere to best practices, respect user privacy, and continuously iterate to optimize the quality and performance of your transcription pipeline.

FAQ

Q1: How do I make a request to the Whisper API?

A1: To make a request to the Whisper API, you need to construct a POST request to the appropriate API endpoint. Include the required parameters such as the audio file and the model name, plus any additional settings. You’ll receive a response containing the transcribed text in the specified format (e.g., JSON or plain text) that you can handle in your application.

Q2: Can I customize the output of the Whisper API?

A2: Yes, within limits. You cannot adjust voice characteristics such as speaking rate, pitch, or volume, because the Whisper API returns text rather than audio. You can, however, supply a language hint, a short prompt that biases the spelling of names and jargon, a sampling temperature, and a response format. By experimenting with these settings, you can fine-tune the transcripts to match your application’s requirements.

Q3: What output formats are supported by the Whisper API?

A3: The Whisper API returns text in several formats. You can choose JSON, plain text, a more detailed JSON variant that includes segment timestamps, or the SRT and VTT subtitle formats. On the input side, it accepts common audio formats such as MP3, WAV, and M4A. The choice of output format depends on your application’s needs and how you plan to handle and use the transcript.
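To illustrate working with a timestamped response, the sketch below turns a detailed JSON response into readable lines. It assumes the response contains a "segments" list whose entries have "start", "end" (both in seconds), and "text" fields, as in the verbose JSON format; the function names are hypothetical and the field names should be checked against the API reference.

```python
import json


def format_timestamp(seconds):
    """Render a number of seconds as MM:SS, dropping fractions of a second."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


def format_segments(verbose_json):
    """Return one '[start - end] text' line per transcript segment."""
    payload = json.loads(verbose_json)
    lines = []
    for segment in payload.get("segments", []):
        start = format_timestamp(segment["start"])
        end = format_timestamp(segment["end"])
        lines.append(f"[{start} - {end}] {segment['text'].strip()}")
    return lines
```

If you only need subtitles, requesting the SRT or VTT format directly is simpler than building them from segments yourself.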

Q4: How can I handle errors and retries when using the Whisper API?

A4: It’s important to implement proper error handling and retries in your application when using the Whisper API. In case of network issues or temporary service unavailability, you can handle errors by checking the response status codes and error messages. Implementing retry mechanisms with exponential backoff can help recover from transient failures and ensure a reliable integration with the API.