How To Build Your Own ChatGPT 2023

In recent years, chatbots have become increasingly popular as a means of enhancing customer support, automating repetitive tasks, and providing personalized user experiences. One of the most advanced and widely used language models behind chatbots is GPT-3 (Generative Pre-trained Transformer 3), developed by OpenAI. GPT-3 is a powerful language model capable of generating human-like text responses. Building your own chatbot powered by GPT-3 can be an exciting and rewarding project. In this article, we will explore the steps involved in creating your very own ChatGPT.

- Understanding GPT-3 and its Capabilities

- Understanding ChatGPT

- The Basics of Building ChatGPT

- Choosing the Right Tools and Frameworks

- Data Collection and Preprocessing

- Model Training and Fine-Tuning

- Deploying Your ChatGPT Model

- Testing and Iterating

- Improving ChatGPT Performance

- Considerations for Scaling ChatGPT

- Conclusion

- FAQs

Understanding GPT-3 and its Capabilities:

Before diving into building your chatbot, it’s crucial to grasp the capabilities of GPT-3. GPT-3 has been trained on a massive amount of text data and can generate coherent and contextually relevant responses. It can understand and generate text in multiple languages, answer questions, provide explanations, and even generate creative content like poems or stories. By harnessing the power of GPT-3, you can create a chatbot that engages users in natural and dynamic conversations.

Defining the Purpose and Scope of Your Chatbot:

The first step in building your own ChatGPT is to clearly define the purpose and scope of your chatbot. Ask yourself: What problem will your chatbot solve? Will it assist customers with inquiries, provide recommendations, or offer a personalized experience? Understanding the specific goals of your chatbot will guide you in designing its conversational flow and determining the type of interactions it needs to handle.

Gathering Training Data:

To train your chatbot effectively, you’ll need a diverse and high-quality dataset. This data will be used to fine-tune GPT-3 and teach it to generate appropriate responses. You can start by collecting conversational data related to your chatbot’s intended purpose. This data can be sourced from customer support chats, forum discussions, or any relevant text corpus. Additionally, you can augment your dataset with prompts and responses to create a more focused and tailored chatbot.

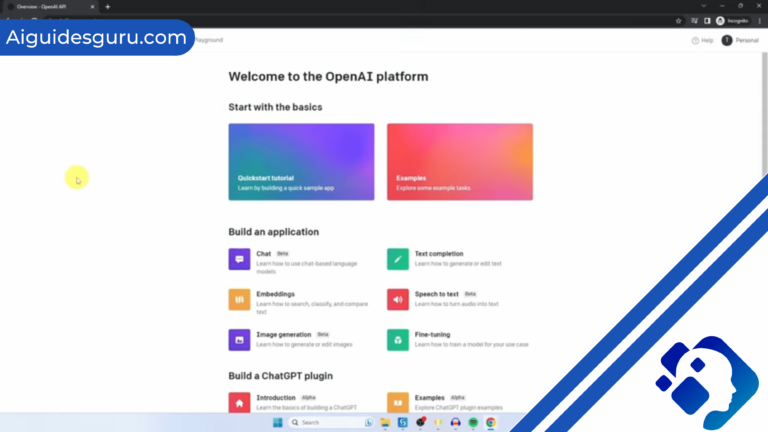

Integrating with the OpenAI API:

Once you have prepared your training data, you can integrate your chatbot with the OpenAI API. The API allows you to interact with GPT-3 and send prompts to generate responses. OpenAI provides detailed documentation and code examples to help you get started. By leveraging the API, you can unleash the power of GPT-3 and enable your chatbot to generate human-like responses in real-time.
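As an illustration, here is a minimal sketch of one request to OpenAI’s Chat Completions endpoint using only the Python standard library. It assumes the endpoint URL `https://api.openai.com/v1/chat/completions`, the model name `gpt-3.5-turbo`, and an API key stored in the `OPENAI_API_KEY` environment variable. Check OpenAI’s current API reference before relying on any of these. Building the request and parsing the response are kept in separate functions so you can test the parsing without a network call.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"  # assumed endpoint


def build_chat_request(messages, api_key, model="gpt-3.5-turbo", max_tokens=256):
    """Build (but do not send) a POST request for a chat completion."""
    payload = {"model": model, "messages": messages, "max_tokens": max_tokens}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_body):
    """Return the assistant's text from a decoded Chat Completions response."""
    return response_body["choices"][0]["message"]["content"]


def ask(messages, model="gpt-3.5-turbo"):
    """Send the request and return the reply (needs network access and a key)."""
    request = build_chat_request(messages, os.environ["OPENAI_API_KEY"], model)
    with urllib.request.urlopen(request, timeout=30) as response:
        return extract_reply(json.load(response))
```

A call would look like `ask([{"role": "system", "content": "You are a helpful support agent."}, {"role": "user", "content": "Where is my order?"}])`. In practice, OpenAI’s official client library wraps these same HTTP details for you.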

Understanding ChatGPT

GPT-3, developed by OpenAI, is an advanced language model that has had a major impact on the field of natural language processing. It and its successors power many popular chatbots, including ChatGPT, which launched on GPT-3.5, a refinement of GPT-3. To build your own ChatGPT, it is crucial to understand the capabilities and workings of GPT-3. Let’s delve deeper into the key aspects of this impressive language model.

Language Generation and Understanding:

GPT-3 is designed to generate human-like text responses based on the provided prompts. It can understand and process natural language input, making it ideal for chatbot applications. The model has been trained on a vast corpus of text from various sources, enabling it to generate coherent and contextually relevant responses. Whether it’s answering questions, providing explanations, or engaging in conversations, GPT-3 excels at mimicking human-like language patterns.

Contextual Understanding:

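One of the remarkable features of GPT-3 is its ability to use context throughout a conversation. It can refer back to earlier messages or prompts, allowing for more coherent and meaningful interactions. The model itself is stateless, however. It only “remembers” what your application sends in each request, so context is maintained by including the relevant conversation history with every call, up to the model’s context-window limit. Managed this way, contextual understanding enables ChatGPT to provide more accurate responses and engage users in dynamic conversations.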
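Because the model keeps no memory between calls, the application has to store the running message list and resend it on every turn. The sketch below shows one way to do this. The `Conversation` class and the `send` callable are hypothetical names. `send` stands for whatever function actually calls the model, such as an API wrapper.

```python
class Conversation:
    """Keeps the running message list that is re-sent to the model each turn."""

    def __init__(self, system_prompt):
        self.messages = [{"role": "system", "content": system_prompt}]

    def add_user(self, text):
        self.messages.append({"role": "user", "content": text})

    def add_assistant(self, text):
        self.messages.append({"role": "assistant", "content": text})

    def payload(self):
        # Return copies so callers cannot mutate the stored history by accident.
        return [dict(m) for m in self.messages]

    def turn(self, user_text, send):
        """Record the user message, send the full history, record the reply.

        `send` is any callable mapping a message list to reply text
        (hypothetical here, e.g. a wrapper around an API call).
        """
        self.add_user(user_text)
        reply = send(self.payload())
        self.add_assistant(reply)
        return reply
```

Each call to `turn` sends the whole history so far. This is why long conversations eventually have to be trimmed or summarized to stay within the context window.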

Multilingual Support:

GPT-3 can work in many languages, making it a versatile tool for building chatbots that cater to a global audience. Whether your target users speak English, Spanish, French, or another supported language, you can leverage GPT-3 to create a multilingual chatbot. Response quality is generally strongest in widely represented languages such as English, so test each language you plan to support.

Creative Content Generation:

Beyond its conversational abilities, GPT-3 can also generate creative content. It can compose poems, write stories, and even generate code snippets. This opens up exciting possibilities for expanding the functionality of your ChatGPT beyond mere chat-based interactions. You can explore incorporating creative features into your chatbot to provide users with a unique and engaging experience.

Ethical Considerations:

While GPT-3 demonstrates impressive language generation capabilities, it’s essential to be aware of potential ethical concerns. The model is trained on large amounts of data from the internet, which means it can inadvertently generate biased or inappropriate responses. It’s crucial to implement robust moderation and filtering mechanisms to ensure that your chatbot adheres to ethical guidelines and provides a safe and inclusive user experience.
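One simple layer of such filtering is a pattern blocklist applied to both the user’s input and the model’s output. The sketch below shows that idea. The blocked patterns and fallback text are placeholders. A real deployment would use a much richer list and would typically pair it with a dedicated moderation model or service, such as OpenAI’s moderation endpoint.

```python
import re

# Placeholder patterns for illustration only; a real list would be far larger
# and is usually combined with a dedicated moderation model or service.
BLOCKED_PATTERNS = [
    re.compile(p, re.IGNORECASE) for p in (r"\bforbidden\b", r"\bbanned phrase\b")
]

FALLBACK_REPLY = "Sorry, I can't help with that."


def is_allowed(text):
    """Return False if any blocked pattern appears in the text."""
    return not any(p.search(text) for p in BLOCKED_PATTERNS)


def moderate_exchange(user_text, generate):
    """Check the user input, generate a reply, then check the reply as well.

    `generate` is a hypothetical callable that produces the model's reply.
    """
    if not is_allowed(user_text):
        return FALLBACK_REPLY
    reply = generate(user_text)
    return reply if is_allowed(reply) else FALLBACK_REPLY
```

The output is checked as well as the input because, as noted above, the model can produce inappropriate text even from an innocent prompt.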

Understanding the nuances and intricacies of GPT-3 is fundamental to building a successful ChatGPT. By harnessing its language generation and understanding capabilities, leveraging its contextual understanding, exploring multilingual support, and being mindful of ethical considerations, you can create a powerful and user-friendly chatbot that enhances user engagement and satisfaction.

The Basics of Building ChatGPT

Building your own ChatGPT involves a systematic approach that encompasses several key steps. In this section, we will explore the fundamental aspects of constructing a ChatGPT chatbot that delivers an exceptional user experience.

Defining the Purpose and Scope:

Before diving into the technical implementation, it’s crucial to define the purpose and scope of your ChatGPT. What problem or need will your chatbot address? Will it provide customer support, offer recommendations, or engage users in casual conversations? Clearly defining the goals and intended functionalities of your chatbot will guide the entire development process.

Choosing the Right Framework or Platform:

To build ChatGPT, you need a suitable framework or platform for working with language models. GPT-3 itself is available only through the OpenAI API, and its weights cannot be downloaded. If you would rather train and host an open-source model yourself, TensorFlow and PyTorch are the most popular options. Evaluate the features, documentation, and community support of different options to select the one that best aligns with your project requirements.

Data Collection and Preprocessing:

To train your ChatGPT, you’ll need a dataset that comprises conversational data relevant to your chatbot’s purpose. This data can be collected from various sources, such as customer support chats, online forums, or publicly available chat logs. Preprocess the dataset by cleaning and formatting the text, ensuring it aligns with the input format expected by your chosen framework or platform.

Fine-tuning the Language Model:

Fine-tuning can help make your ChatGPT more specific and attuned to your desired use case. Fine-tuning means further training a pre-trained model on your specific dataset. For GPT-3, this is done through OpenAI’s hosted fine-tuning service: you upload your training data, and OpenAI trains a custom version of the model for you. This process helps the model adapt to the desired conversational style and domain. Follow the guidelines provided by the framework or platform you’ve chosen to fine-tune the model effectively.

Designing the Conversational Flow:

The conversational flow determines how your ChatGPT engages with users. Design a coherent and logical flow that allows for smooth interactions. Consider the different user inputs and define the corresponding responses or actions your chatbot will provide. Incorporate decision-making mechanisms, such as rules or machine learning algorithms, to handle complex scenarios and guide the conversation effectively.
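A common way to combine rules with a language model is to answer predictable requests from deterministic rules and pass everything else to the model. The sketch below assumes a hypothetical rule table and a `model_fallback` callable standing in for the real model call.

```python
import re

# Hypothetical rules: (pattern, canned response), checked in order.
RULES = [
    (re.compile(r"\b(opening hours|open)\b", re.IGNORECASE),
     "We are open 9:00 to 17:00, Monday to Friday."),
    (re.compile(r"\b(human|agent|representative)\b", re.IGNORECASE),
     "Connecting you to a human agent..."),
]


def route(user_text, model_fallback):
    """Answer from the first matching rule, otherwise defer to the model."""
    for pattern, response in RULES:
        if pattern.search(user_text):
            return response
    return model_fallback(user_text)
```

Rules make critical paths predictable, such as escalation to a human. The model handles the open-ended remainder.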

Testing and Iteration:

Testing is a vital step in the development of ChatGPT. Engage in thorough testing to ensure the chatbot performs as intended and delivers accurate and meaningful responses. Test the chatbot’s ability to handle different user queries, edge cases, and potential challenges. Collect feedback from users and iterate on the model and the conversational flow to continually improve the user experience.

By following these basic building steps, you can develop a functional and engaging ChatGPT chatbot. Remember to monitor and analyze user interactions to gather insights and make iterative improvements. Building a successful chatbot takes time and effort, but with the right approach, you can create a powerful tool that caters to the needs of your users.

Choosing the Right Tools and Frameworks

When building your ChatGPT chatbot, selecting the right tools and frameworks is crucial for a smooth and efficient development process. In this section, we will explore some essential considerations to help you choose the most suitable tools and frameworks for your project.

Frameworks for Language Models:

GPT-3’s weights are not publicly released, so you work with GPT-3 through the OpenAI API. If you want a model you can train and host yourself, frameworks and libraries such as TensorFlow, PyTorch, and Hugging Face’s Transformers library provide pre-trained open models (for example, GPT-2) and support for fine-tuning. These frameworks offer extensive documentation, community support, and pre-built components that simplify integrating a language model into your chatbot application.

Natural Language Processing (NLP) Libraries:

NLP libraries are essential for processing and understanding user inputs and generating appropriate responses. Libraries like NLTK (Natural Language Toolkit) and spaCy provide a wide range of NLP functionalities, including tokenization, part-of-speech tagging, named entity recognition, and more. These libraries can enhance the language understanding capabilities of your ChatGPT chatbot.

Conversational AI Platforms:

If you prefer a more streamlined approach, you can consider using conversational AI platforms that provide comprehensive tools and infrastructure for building chatbots. Platforms like Dialogflow, IBM Watson Assistant, and Microsoft Bot Framework offer intuitive interfaces, natural language understanding capabilities, and integrations with popular messaging platforms. These platforms can simplify the development and deployment of your ChatGPT chatbot.

Cloud Services:

When deploying your ChatGPT chatbot, cloud services provide the scalability and reliability necessary to handle user interactions effectively. Cloud providers like Amazon Web Services (AWS), Google Cloud, and Microsoft Azure offer various services, such as virtual machines, serverless computing, and managed databases. These services ensure your chatbot can handle high traffic and provide a seamless user experience.

Version Control and Collaboration Tools:

Effective collaboration and version control are essential when working on a chatbot project. Tools like Git and GitHub enable version control, allowing multiple developers to work on the codebase simultaneously. Collaborative platforms like Slack or Microsoft Teams facilitate communication and knowledge sharing among team members, ensuring a smooth development process.

Analytics and Monitoring Tools:

To gain insights into user interactions and improve your ChatGPT chatbot over time, it’s important to integrate analytics and monitoring tools. Services like Google Analytics, Mixpanel, or custom-built analytics solutions can help you track user engagement, identify bottlenecks, and gather valuable feedback. Monitoring tools like New Relic or Datadog ensure the performance and availability of your chatbot.

When selecting tools and frameworks, consider factors such as the complexity of your project, the expertise of your development team, the required scalability, and your budget. Additionally, explore the documentation, community support, and availability of pre-built components or libraries that align with your specific needs.

By carefully choosing the right tools and frameworks, you can streamline the development process and create a robust and efficient ChatGPT chatbot that meets the expectations of your users.

Data Collection and Preprocessing

Collecting and preprocessing data is a crucial step in training your ChatGPT chatbot effectively. High-quality and relevant data directly influence the performance and accuracy of your chatbot’s responses. In this section, we will explore the importance of data collection and the key steps involved in data preprocessing for your ChatGPT chatbot.

Data Collection:

The first step is to gather a dataset that consists of conversational data relevant to your chatbot’s purpose. Depending on your specific use case, you can collect data from various sources such as customer support chats, online forums, chat logs, or even user interactions with existing chatbots. It’s important to ensure the dataset covers a wide range of topics and user queries to provide a diverse training experience.

Data Filtering and Cleaning:

Once you have collected the dataset, it’s essential to filter and clean the data to remove noise, irrelevant information, or any sensitive or personally identifiable information (PII). This step helps ensure the quality and integrity of the training data. You can use techniques like regular expressions, keyword filtering, or custom rules to filter out unwanted content. Additionally, you may need to anonymize or redact any PII present in the dataset.
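The sketch below shows regex-based cleaning of this kind. It redacts email addresses and phone-number-like digit runs, normalizes whitespace, and drops empty or duplicate messages. The patterns are deliberately simple assumptions. Real PII detection needs broader coverage, such as names, addresses, and account numbers, and should be validated on your own data.

```python
import re

# Deliberately simple patterns for illustration. PHONE_RE can also catch other
# long digit runs (dates, order numbers), so review what it redacts.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text):
    """Replace email addresses and phone-number-like runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def clean_dataset(records, min_length=2):
    """Redact PII, collapse whitespace, and drop near-empty or duplicate texts."""
    seen = set()
    cleaned = []
    for text in records:
        text = " ".join(redact_pii(text).split())
        if len(text) < min_length or text in seen:
            continue
        seen.add(text)
        cleaned.append(text)
    return cleaned
```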

Formatting and Tokenization:

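To train your ChatGPT chatbot, the dataset needs to be formatted and tokenized appropriately. Tokenization breaks text into smaller units, such as words or subwords, which the language model processes as its basic input. GPT-style models use their own subword tokenizer, so any text you feed to the model must be tokenized with that model’s tokenizer. Word-level tokenizers from NLP libraries such as NLTK or spaCy are useful for analyzing and cleaning the data, but not as model input. If you fine-tune through the OpenAI API, you submit formatted text and the tokenization happens on OpenAI’s side. Ensure that your data format aligns with the requirements of your chosen framework or platform.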
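For OpenAI’s hosted fine-tuning of chat models, training data is supplied as a JSON Lines file, with one example conversation per line under a `messages` key. Older GPT-3 base models used prompt/completion pairs instead. The sketch below writes (user, assistant) pairs in the chat layout. Treat the exact schema as an assumption and confirm it against OpenAI’s current fine-tuning guide.

```python
import json


def to_chat_example(system_prompt, user_text, assistant_text):
    """One training example in the chat fine-tuning layout (assumed schema)."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": assistant_text},
        ]
    }


def to_jsonl(pairs, system_prompt):
    """Serialize (user, assistant) pairs as JSON Lines: one object per line."""
    return "".join(
        json.dumps(to_chat_example(system_prompt, user, assistant), ensure_ascii=False)
        + "\n"
        for user, assistant in pairs
    )


def write_jsonl(path, pairs, system_prompt):
    """Write the JSON Lines text to a UTF-8 file."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(to_jsonl(pairs, system_prompt))
```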

Dataset Balancing:

In some cases, your collected dataset may have an imbalance in the distribution of topics or user queries. It’s important to address this issue by balancing the dataset to ensure fair representation of different topics and scenarios. You can manually add more examples or use data augmentation techniques to create additional instances for underrepresented topics. Balancing the dataset helps your chatbot provide accurate responses across various user queries.
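The simplest balancing technique is random oversampling, which duplicates examples from smaller topics until every topic matches the largest. This is a swapped-in technique rather than one the text prescribes, and it is sketched below under the assumption that each example is a `(topic, text)` pair. Because it only repeats existing examples, heavy oversampling can encourage memorization. Adding new examples or augmenting data, as described above, avoids that.

```python
import random
from collections import defaultdict


def oversample_by_topic(examples, seed=0):
    """Duplicate examples from smaller topics until every topic has as many
    examples as the largest one. `examples` is a list of (topic, text) pairs."""
    if not examples:
        return []
    rng = random.Random(seed)
    by_topic = defaultdict(list)
    for topic, text in examples:
        by_topic[topic].append((topic, text))
    target = max(len(items) for items in by_topic.values())
    balanced = []
    for items in by_topic.values():
        balanced.extend(items)
        # Draw with replacement from this topic to close the gap to the target.
        balanced.extend(rng.choices(items, k=target - len(items)))
    rng.shuffle(balanced)
    return balanced
```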

Splitting the Dataset:

To train and evaluate your ChatGPT chatbot effectively, it’s essential to split the dataset into training, validation, and testing sets. The training set is used to train the model. The validation set is not trained on; it is used during development to compare model versions and tune hyperparameters. The testing set is held back until the end to assess the final performance of the chatbot. An 80-10-10 split is a common choice, but the exact ratio can vary based on the size of the dataset and specific requirements.
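Here is a minimal sketch of the 80-10-10 split with a seeded shuffle, so the split is reproducible. The function name and defaults are illustrative.

```python
import random


def split_dataset(examples, train=0.8, val=0.1, seed=42):
    """Shuffle a copy of the data and split it into train/validation/test lists.

    Whatever remains after the train and validation shares becomes the test set.
    """
    items = list(examples)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    return (
        items[:n_train],
        items[n_train:n_train + n_val],
        items[n_train + n_val:],
    )
```

For conversational data, it is usually safer to split whole conversations rather than individual messages. Otherwise, turns from the same conversation can end up in both training and test sets and inflate the test scores.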

Data Augmentation (Optional):

Consider augmenting your dataset by generating additional synthetic examples. Data augmentation techniques such as backtranslation, paraphrasing, or word replacement can help increase the diversity and size of your dataset. This can be particularly useful when working with limited data or to improve the generalization capabilities of your chatbot.
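Word replacement is the easiest of these techniques to illustrate. The sketch below replaces words that have an entry in a synonym table, each with probability `p`. The tiny `SYNONYMS` table is hypothetical. In practice, synonyms might come from a thesaurus such as WordNet or from a paraphrasing model. Review augmented examples, because blind substitutions can change a sentence’s meaning.

```python
import random

# Tiny hypothetical synonym table for illustration.
SYNONYMS = {
    "order": ["purchase"],
    "cancel": ["call off", "stop"],
    "help": ["assist", "support"],
}


def synonym_replace(text, p=0.5, seed=None):
    """Return a variant of `text` where each word found in SYNONYMS is replaced
    with probability `p`. Only exact (lowercase) matches are replaced, so words
    with attached punctuation are left unchanged."""
    rng = random.Random(seed)
    out = []
    for word in text.split():
        options = SYNONYMS.get(word)
        if options and rng.random() < p:
            out.append(rng.choice(options))
        else:
            out.append(word)
    return " ".join(out)
```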

By following these data collection and preprocessing steps, you can ensure that your ChatGPT chatbot is trained on high-quality data, leading to more accurate and contextually relevant responses. Remember to continuously update and refine your dataset as your chatbot interacts with users and collects new conversational data.

Model Training and Fine-Tuning

Training and fine-tuning the language model is a crucial step in customizing your ChatGPT chatbot to meet your specific requirements. Fine-tuning allows you to adapt the pre-trained GPT-3 model to the conversational style and context of your chatbot. In this section, we will explore the process of model training and fine-tuning for your ChatGPT chatbot.

Pre-trained Models:

To begin, you’ll need access to a pre-trained language model like GPT-3. OpenAI provides access to GPT-3 through its API (the model weights are not publicly released), and it serves as an excellent starting point for training your ChatGPT chatbot. The pre-trained model has already learned from a vast amount of data and possesses a general understanding of language and context.

Dataset Preparation:

Prepare your dataset by following the data collection and preprocessing steps outlined in the previous section. Ensure that your dataset is cleaned, formatted, and tokenized appropriately. This dataset will serve as the training data for fine-tuning the language model.

Fine-tuning Process:

Fine-tuning involves training the pre-trained GPT-3 model on your custom dataset to adapt it to your chatbot’s specific conversational style and domain. The process typically involves several iterations, with each iteration consisting of the following steps:

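- Initialize the model: Start from the pre-trained base model and set it up for fine-tuning. With GPT-3, you select a base model in OpenAI’s fine-tuning service, which runs the training steps below on its own infrastructure. With an open-source model, you load its weights in your chosen framework.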

- Define the training objective: Specify the objective function that the model will optimize during training. This can be a language modeling objective, a ranking objective, or a combination of several objectives depending on your specific use case.

- Train the model: Train the model on your dataset by feeding it input text and optimizing its parameters using techniques like backpropagation and gradient descent. Training adjusts the model’s weights to minimize the difference between the model’s predicted responses and the desired responses from the dataset.

- Evaluate and iterate: Evaluate the performance of the fine-tuned model using validation data. Analyze metrics such as perplexity or accuracy to assess the model’s quality. Iterate on the fine-tuning process by modifying hyperparameters, adjusting the training data, or incorporating additional techniques to improve performance.
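Perplexity, mentioned in the last step, is the exponential of the average negative log-probability the model assigns to each token of the validation text. The sketch below assumes you already have per-token natural-log probabilities. How you obtain them depends on your framework or API.

```python
import math


def perplexity(token_logprobs):
    """Perplexity from per-token natural-log probabilities:
    exp of the negative mean log-probability. Lower is better."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)
```

As a sanity check, a model that assigns probability 0.25 to every token has a perplexity of 4. It is "as uncertain" as a uniform choice among four options.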

Hyperparameter Tuning:

During the fine-tuning process, you may need to tune hyperparameters to optimize the model’s performance. Hyperparameters include learning rate, batch size, number of training iterations, and regularization techniques. Experiment with different values for these hyperparameters to find the optimal configuration that yields the best results for your chatbot.
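A straightforward way to run these experiments is an exhaustive grid search, sketched below. `train_and_evaluate` is a hypothetical callable that fine-tunes with the given hyperparameters and returns a validation loss. Grid search is a swapped-in illustration. With many hyperparameters, random search is often more efficient, because the grid grows multiplicatively and every point means a full fine-tuning run.

```python
import itertools


def grid_search(train_and_evaluate, grid):
    """Try every combination in `grid` and return (best_params, best_score).

    `train_and_evaluate` is a hypothetical callable returning a validation loss
    (lower is better) for keyword-argument hyperparameters.
    """
    names = list(grid)
    best_params, best_score = None, float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = train_and_evaluate(**params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score
```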

Monitoring and Validation:

Continuously monitor the performance of the fine-tuned model during training and fine-tuning iterations. Use the validation set to evaluate the model’s responses and identify any issues or areas for improvement. Monitoring and validating the model help ensure that it is learning effectively and generating accurate and contextually relevant responses.
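A common way to act on validation monitoring is early stopping: stop training once validation loss has stopped improving for a set number of evaluations. Here is a minimal sketch. The class name and the `patience` and `min_delta` parameters are illustrative.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved by at least
    `min_delta` for `patience` consecutive evaluations."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_evals = 0

    def step(self, val_loss):
        """Record one validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience
```

Rising validation loss while training loss keeps falling is the usual sign of overfitting, which is what this check guards against.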

Deployment and User Feedback:

Once you are satisfied with the performance of the fine-tuned model, deploy it in a production environment for user interactions. Collect user feedback and monitor the chatbot’s performance in real-world scenarios. User feedback can provide valuable insights for further improvements and iterative fine-tuning of the model.

Remember that the fine-tuning process is an iterative one, and it may require multiple rounds of training, evaluation, and refinement to achieve optimal results. Patience and careful analysis of the model’s performance are essential to create a ChatGPT chatbot that delivers accurate and engaging conversations.

Related: How To Use Whisper API

Deploying Your ChatGPT Model

Once you have trained and fine-tuned your ChatGPT model, the next step is to deploy it, making it available for users to interact with. In this section, we will explore the key considerations and options for deploying your ChatGPT model effectively.

Deployment Options:

There are several deployment options available for your ChatGPT model, depending on your specific requirements and resources:

- Cloud-based Deployment: Deploying your model on a cloud platform like Amazon Web Services (AWS), Google Cloud, or Microsoft Azure provides scalability, reliability, and easy management. You can leverage platform-specific services like AWS Lambda, Google Cloud Functions, or Azure Functions for serverless deployment, or use virtual machines for more control.

- On-Premises Deployment: If you prefer to deploy your model on your own infrastructure, you can set up and manage your servers or use containerization technologies like Docker to encapsulate your model and its dependencies.

- API-based Deployment: Another option is to expose your ChatGPT model as an API, allowing other applications or services to interact with it. You can use frameworks like Flask or FastAPI to develop a RESTful API that receives user inputs and returns model-generated responses.
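To show the shape of API-based deployment without extra dependencies, the sketch below uses the standard library’s `http.server` instead of Flask or FastAPI. The `/chat` route, the JSON fields, and `generate_reply` are hypothetical. The Python documentation does not recommend `http.server` for production, so treat this as an illustration of the request/response contract, not a deployment recipe.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def generate_reply(message):
    """Placeholder for the real model call (hypothetical)."""
    return f"You said: {message}"


class ChatHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/chat":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            body = json.loads(self.rfile.read(length))
            message = body["message"]
        except (ValueError, KeyError, TypeError):
            self._send_json(400, {"error": "expected JSON with a 'message' field"})
            return
        self._send_json(200, {"reply": generate_reply(message)})

    def _send_json(self, status, payload):
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, fmt, *args):
        pass  # silence the default per-request logging to stderr


def make_server(host="127.0.0.1", port=8000):
    """Create a threaded server; call serve_forever() on it to start handling."""
    return ThreadingHTTPServer((host, port), ChatHandler)
```

Running `make_server().serve_forever()` then accepts `POST /chat` with a body such as `{"message": "hi"}` and returns `{"reply": "..."}`. The same contract carries over directly to a Flask or FastAPI route.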

Scalability and Performance:

Consider the scalability and performance requirements of your ChatGPT model. If you anticipate high user traffic and a large number of concurrent interactions, you need to ensure your deployment strategy can handle the load effectively. Scaling options such as auto-scaling groups, load balancers, and caching mechanisms can help distribute the workload and improve response times.

Security Considerations:

When deploying your ChatGPT model, pay attention to security considerations. Protect sensitive user data, adhere to privacy regulations, and implement authentication and authorization mechanisms to control access to your API or deployment infrastructure. Encrypting network communication and ensuring secure storage of data are also important aspects to consider.
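As one small example of access control, the sketch below checks a bearer token from an `Authorization` header against an expected API key. The header convention and function name are assumptions. It uses `hmac.compare_digest`, which avoids content-based short-circuiting, so response timing does not reveal how much of a guessed key was correct.

```python
import hmac


def is_authorized(auth_header, expected_key):
    """Check an 'Authorization: Bearer <key>' header value against the expected key."""
    if not auth_header or not auth_header.startswith("Bearer "):
        return False
    supplied = auth_header[len("Bearer "):]
    # compare_digest avoids leaking, through timing, how many characters matched.
    return hmac.compare_digest(supplied.encode("utf-8"), expected_key.encode("utf-8"))
```

Load the expected key from a secret store or environment variable rather than hard-coding it, and only accept it over encrypted (HTTPS) connections.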

Monitoring and Logging:

Implement monitoring and logging mechanisms to track the performance and usage of your deployed ChatGPT model. Services like AWS CloudWatch, Google Cloud Monitoring, or open-source tools like Prometheus and Grafana can provide insights into resource utilization, response times, error rates, and other important metrics. Monitoring helps you identify and address issues promptly and optimize the performance of your chatbot.
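Before wiring up a full monitoring stack, it helps to see which numbers are being collected. The sketch below is a decorator that counts calls and errors and accumulates latency in memory. The `monitored` name and `METRICS` structure are hypothetical. A real service would export these values to a system such as CloudWatch or Prometheus instead of keeping them in a dictionary.

```python
import functools
import time
from collections import defaultdict

# In-process counters, not thread-safe; a real service would export these
# to a monitoring system rather than keep them in memory.
METRICS = defaultdict(lambda: {"calls": 0, "errors": 0, "total_seconds": 0.0})


def monitored(name):
    """Decorator recording call count, error count, and cumulative latency."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            stats = METRICS[name]
            stats["calls"] += 1
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                stats["errors"] += 1
                raise
            finally:
                stats["total_seconds"] += time.perf_counter() - start
        return wrapper
    return decorator
```

From these values, the average response time is `total_seconds / calls` and the error rate is `errors / calls`.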

Continuous Integration and Deployment (CI/CD):

Adopting CI/CD practices can streamline the deployment process and enable faster iterations. Use version control systems like Git to manage your codebase, and set up automated pipelines for building, testing, and deploying your ChatGPT model. Continuous integration ensures that any code changes undergo proper testing, while continuous deployment automates the deployment process, reducing manual effort and potential errors.

User Experience and Integration:

Consider the user experience (UX) of your ChatGPT chatbot and how it integrates with different platforms. Ensure that the chatbot seamlessly integrates with your desired messaging channels, such as websites, mobile apps, or popular messaging platforms like Facebook Messenger or Slack. Design an intuitive and user-friendly interface that guides users through the conversation flow and provides clear instructions and feedback.

Deploying your ChatGPT model effectively requires a well-planned strategy that considers scalability, security, monitoring, and integration aspects. Regularly monitor the performance of your deployed chatbot, collect user feedback, and iterate on improvements to enhance the user experience and optimize the model’s responses.

Testing and Iterating

Testing and iterating are crucial steps in the development and maintenance of your ChatGPT chatbot. These steps help ensure that your chatbot performs accurately, provides relevant responses, and continuously improves over time. In this section, we will explore the key considerations for testing and iterating your ChatGPT chatbot effectively.

Test Strategy:

Develop a comprehensive test strategy to evaluate the performance and behavior of your ChatGPT chatbot. Consider the following types of tests:

- Unit Testing: Test individual components or functions of your chatbot to ensure they work as expected. This can include testing preprocessing functions, response generation, or any specific modules you have developed.

- Integration Testing: Test the integration of different components of your chatbot to ensure they work together seamlessly. This can involve testing the interaction between the language model, input handling, and response generation.

- Functional Testing: Test the functional requirements of your chatbot by providing various inputs and verifying that the generated responses are accurate and contextually relevant.

- User Experience Testing: Evaluate the user experience of your chatbot by simulating user interactions and assessing factors such as response time, conversational flow, and error handling.

- Edge Case Testing: Test your chatbot with challenging or unusual inputs to ensure it can handle unexpected user queries or scenarios gracefully.
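Unit and edge-case tests can be written with Python’s built-in `unittest` module. The sketch below tests a hypothetical preprocessing function, `normalize_input`, covering a normal case and two edge cases (blank input and over-long input). The 500-character cap is an illustrative assumption.

```python
import unittest


def normalize_input(text):
    """Hypothetical preprocessing step: trim, collapse whitespace, cap length."""
    return " ".join(text.split())[:500]


class NormalizeInputTests(unittest.TestCase):
    def test_collapses_whitespace(self):
        self.assertEqual(normalize_input("  where   is\tmy order? "), "where is my order?")

    def test_blank_input_becomes_empty(self):
        # Edge case: whitespace-only input should not crash and should yield "".
        self.assertEqual(normalize_input("   "), "")

    def test_long_input_is_truncated(self):
        # Edge case: very long input is capped at 500 characters.
        self.assertEqual(len(normalize_input("a" * 1000)), 500)
```

Saved in a file, these tests run with `python -m unittest`. Model outputs themselves are not deterministic, so tests of generated responses usually check properties (length, format, forbidden content) rather than exact text.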

User Feedback and Iteration:

Collect user feedback to understand how your ChatGPT chatbot performs in real-world scenarios. Encourage users to provide feedback on the chatbot’s responses, usability, and any issues they encounter. Analyze this feedback to identify improvement areas and iterate on your chatbot’s responses or underlying model.

Continuous Training and Fine-Tuning:

As you gather user feedback and identify areas for improvement, consider incorporating new data into your training pipeline. Continuously train and fine-tune your ChatGPT model using the latest feedback and data to enhance its performance and make it more contextually aware.

A/B Testing:

Conduct A/B testing to compare different versions or variations of your chatbot. Deploy multiple variants and compare their performance based on metrics such as user satisfaction, completion rates, or accuracy. A/B testing helps you identify the most effective changes or improvements to apply to your chatbot.
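For A/B tests, each user should consistently see the same variant. One common approach is to hash the user ID together with an experiment name, sketched below. The function and experiment names are illustrative.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("A", "B")):
    """Deterministically map a user to a variant for a given experiment.

    Hashing (experiment, user_id) keeps each user in the same variant across
    sessions while spreading users roughly evenly across variants.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]
```

Including the experiment name in the hash reshuffles users for each new experiment, so the same people do not always land in group "A".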

Version Control and Rollbacks:

Implement version control for your chatbot’s codebase and models. This allows you to track changes, revert to previous versions if needed, and maintain a history of improvements. Version control also facilitates collaboration among team members working on the chatbot’s development.

Regular Maintenance and Updates:

Regularly maintain and update your ChatGPT chatbot to address issues, incorporate new features, or adapt to evolving user needs. Monitor the chatbot’s performance, analyze usage patterns, and proactively identify areas for improvement. Keep track of the latest advancements in NLP and AI technologies to leverage them for enhancing your chatbot’s capabilities.

Testing and iterating are ongoing processes that ensure the continuous improvement and effectiveness of your ChatGPT chatbot. By incorporating user feedback, regularly training and fine-tuning the model, and maintaining a rigorous testing strategy, you can create a chatbot that provides accurate, contextually relevant, and engaging conversations with users.

Improving ChatGPT Performance

While ChatGPT is a powerful language model, there are several techniques you can employ to improve its performance and enhance the quality of its responses. In this section, we will explore some strategies to optimize and refine your ChatGPT chatbot.

Dataset Quality:

The quality of your training dataset plays a significant role in shaping the performance of your ChatGPT model. Ensure that your dataset is diverse, representative, and well-prepared. Include a wide range of conversational examples, covering different topics, user intents, and linguistic variations. Clean and preprocess the dataset to remove noise, errors, or irrelevant content that can negatively impact the model’s training.

Dataset Size:

The size of your training dataset can also impact the performance of your ChatGPT model. Generally, larger datasets tend to yield better results. If possible, consider expanding your dataset by collecting more conversational data or incorporating relevant publicly available datasets. However, be mindful of data quality and avoid including low-quality or biased data.

Problem Formulation:

Clearly define the problem you want your ChatGPT chatbot to solve. Formulate the problem as a conversational task with specific objectives. This clarity helps the model understand the context and generate more relevant responses. For example, if you are building a customer support chatbot, define the problem as providing accurate and helpful responses to customer queries.

Context Window:

ChatGPT has a limited context window: the prompt and the response together must fit within a fixed number of tokens, so in long conversations the full history cannot be sent with every request. To improve performance, you can truncate or summarize the conversation history to provide the most relevant context to the model. Experiment with how much history you include to find the best balance between context and response quality.
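The sketch below shows simple truncation. It always keeps the system message and then keeps as many of the most recent messages as fit within a token budget. Token counts use a rough heuristic of about four characters per token for English text. This is an assumption; exact counts require the model’s own tokenizer.

```python
def approx_tokens(text):
    """Very rough token estimate (about 4 characters per token for English).

    Heuristic only; use the model's own tokenizer for exact counts.
    """
    return max(1, len(text) // 4)


def trim_history(messages, max_tokens):
    """Keep system messages plus as many of the most recent other messages as
    fit within `max_tokens` (estimated), preserving their original order."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(approx_tokens(m["content"]) for m in system)
    kept = []
    for message in reversed(rest):
        cost = approx_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))
```

Dropping the oldest turns is the simplest policy. Summarizing them into a short message instead preserves more context at the cost of an extra model call.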

System Messages and User Prompts:

System messages set the chatbot’s overall behavior (for example, its role, tone, and constraints), while user messages carry the user’s actual query. Craft clear and explicit system messages and user prompts to guide the model’s responses effectively. Experiment with different wording and formatting styles to achieve the desired behavior and improve the chatbot’s conversational flow.

Response Length:

Each response is capped by a token limit. The API’s `max_tokens` parameter sets the maximum length of the reply, and the prompt and reply together must also fit within the model’s context window. If you encounter long or cut-off responses, you can raise the limit, ask for more concise answers in your system message, or truncate or summarize the generated text to keep answers coherent. Be mindful of maintaining the necessary information and context in the response while adhering to the token limit.
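A cut-off reply can be detected from the response itself. In the Chat Completions response format, a choice’s `finish_reason` is `"length"` when generation stopped at the token limit and `"stop"` when the model ended naturally. The sketch below checks for truncation and builds a follow-up that asks the model to continue. The continuation prompt is a hypothetical strategy, not an official API feature.

```python
def needs_continuation(response_body):
    """True if the first choice stopped because it hit the token limit."""
    return response_body["choices"][0].get("finish_reason") == "length"


def continuation_messages(messages, partial_reply):
    """Append the cut-off reply and ask the model to continue (hypothetical
    strategy; the follow-up wording is illustrative)."""
    return messages + [
        {"role": "assistant", "content": partial_reply},
        {"role": "user", "content": "Please continue from where you stopped."},
    ]
```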

Reinforcement Learning:

Reinforcement learning (RL) techniques can be used to fine-tune and improve the behavior of your ChatGPT chatbot. RL involves training the model using feedback from human evaluators or simulated users. By providing rewards or penalties based on the quality of responses, you can guide the model to generate more desirable and appropriate replies. RL training requires access to the model’s weights, so it applies to models you host yourself rather than to GPT-3 used through the API.

Human-in-the-Loop:

Incorporate human-in-the-loop mechanisms to validate and curate the responses generated by your ChatGPT chatbot. Have human reviewers evaluate and rate the model’s responses, providing feedback and guidance. This iterative feedback loop helps refine the model’s performance over time and ensures high-quality responses.

User Feedback Loop:

Encourage users to provide feedback on the chatbot’s responses. Implement mechanisms for users to rate the responses or report any issues encountered. Analyze this feedback to identify recurring problems or areas for improvement. Actively engage with user feedback to continuously enhance the performance and user experience of your chatbot.

Regular Updates and Iterations:

Continuously iterate and update your ChatGPT chatbot to address limitations, incorporate new data, and improve its performance. Stay up to date with the latest advancements in natural language processing (NLP) research and techniques. Explore research papers, attend conferences, and participate in the NLP community to leverage cutting-edge approaches for further enhancing your chatbot.

Remember that improving ChatGPT performance is an ongoing process that requires experimentation, analysis, and iteration. By applying these strategies and continuously refining your model, you can create a ChatGPT chatbot that delivers accurate, relevant, and engaging conversations.

Considerations for Scaling ChatGPT

Scaling ChatGPT involves preparing your chatbot to handle increased user demand and ensuring its performance remains optimal as the user base grows. In this section, we will explore some key considerations for effectively scaling your ChatGPT chatbot.

Infrastructure Scaling:

To handle increased user traffic, you need to scale the underlying infrastructure supporting your ChatGPT chatbot. Consider the following options:

- Horizontal Scaling: Scale horizontally by adding more servers or instances to distribute the workload. This can involve using load balancers to evenly distribute incoming requests across multiple instances.

- Vertical Scaling: Scale vertically by upgrading the resources (CPU, memory) of your existing servers or instances to handle increased demand. This may involve migrating to more powerful machines or increasing the capacity of your cloud instances.

- Auto-Scaling: Implement auto-scaling capabilities that automatically adjust the number of instances based on the current demand. Cloud platforms like AWS, Google Cloud, and Azure provide auto-scaling features that can dynamically scale your infrastructure up or down based on predefined rules or metrics.

- Caching: Implement caching mechanisms to reduce the load on your ChatGPT model. Caching can store frequently accessed data or responses, allowing them to be served quickly without invoking the model for every request.
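Many auto-scaling rules use target tracking. You choose a target load per instance and resize the fleet in proportion to how far the observed load is from that target. Kubernetes' Horizontal Pod Autoscaler, for example, computes desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The sketch below applies that formula with minimum and maximum bounds and a small tolerance band to avoid constant resizing. The metric (requests per second per instance), limits, and tolerance are assumptions you would tune for your own deployment.

```python
import math

def desired_instances(current: int, observed_per_instance: float, target_per_instance: float,
                      min_instances: int = 1, max_instances: int = 20,
                      tolerance: float = 0.1) -> int:
    """Target-tracking scaling: size the fleet so each instance sees roughly the target load."""
    ratio = observed_per_instance / target_per_instance
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target; avoid flapping
    desired = math.ceil(current * ratio)
    return max(min_instances, min(max_instances, desired))

print(desired_instances(4, 75, 50))    # 6: each instance is 50% over target, so add capacity
print(desired_instances(8, 20, 40))    # 4: instances are half-loaded, so scale in
print(desired_instances(10, 100, 40))  # 20: capped at max_instances
```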

Performance Optimization:

Optimize the performance of your ChatGPT chatbot to ensure fast response times and efficient resource utilization. Consider the following techniques:

- Batch Processing: If you host the model yourself, group pending requests into batches so the hardware processes several at once (for example, in a single GPU forward pass). Batching reduces per-request overhead and raises throughput, at the cost of a short wait while each batch fills.

- Asynchronous Processing: Use asynchronous processing to handle multiple requests concurrently. This allows your chatbot to process multiple user interactions simultaneously, improving overall throughput.

- Response Caching: Cache the generated responses for common user queries to avoid redundant computations. This can significantly reduce response times for frequently asked questions or popular queries.

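- Optimization Frameworks: If you host the model yourself, use inference-optimization tools that work with frameworks like TensorFlow or PyTorch (for example, ONNX Runtime or NVIDIA TensorRT) to accelerate the model's inference. Techniques such as quantization, pruning, and other forms of model compression reduce the model's size and improve inference speed, usually at some cost in accuracy. These options do not apply when you call a hosted model such as GPT-3 through an API.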
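Batching, asynchronous processing, and response caching can work together in one request path. The sketch below uses Python's asyncio. Identical queries (after lowercasing and collapsing whitespace) share one pending request and are answered from an in-memory cache afterward. Cache misses are collected during a short window and sent to the model as one batch. `fake_model_batch` is a hypothetical stand-in for your own batched inference call. The cache here is unbounded for brevity; a production system would cap its size and expire entries.

```python
import asyncio

async def fake_model_batch(prompts):
    """Hypothetical stand-in for one batched model call (e.g. a single GPU forward pass)."""
    await asyncio.sleep(0.05)
    return [f"answer to: {p}" for p in prompts]

class BatchingResponder:
    def __init__(self, max_batch=8, max_wait=0.01):
        self.max_batch = max_batch
        self.max_wait = max_wait  # seconds to wait for more requests before sending a batch
        self.cache = {}           # normalized query -> reply (unbounded; use LRU/TTL in production)
        self.inflight = {}        # normalized query -> future shared by identical pending requests
        self.queue = asyncio.Queue()
        self.batches_sent = 0

    @staticmethod
    def normalize(query):
        return " ".join(query.lower().split())

    async def ask(self, query):
        key = self.normalize(query)
        if key in self.cache:
            return self.cache[key]  # cache hit: no model call
        future = self.inflight.get(key)
        if future is None:          # first pending request for this query
            future = asyncio.get_running_loop().create_future()
            self.inflight[key] = future
            self.queue.put_nowait(key)
        return await future

    async def worker(self):
        while True:
            batch = [await self.queue.get()]
            await asyncio.sleep(self.max_wait)  # brief window for more requests to arrive
            while len(batch) < self.max_batch and not self.queue.empty():
                batch.append(self.queue.get_nowait())
            try:
                replies = await fake_model_batch(batch)
            except Exception as exc:
                for key in batch:  # fail every waiter instead of leaving it hanging
                    self.inflight.pop(key).set_exception(exc)
                continue
            self.batches_sent += 1
            for key, reply in zip(batch, replies):
                self.cache[key] = reply
                self.inflight.pop(key).set_result(reply)

async def demo():
    responder = BatchingResponder()
    worker = asyncio.create_task(responder.worker())
    queries = ["What are your hours?", "what are your  HOURS?", "Where do you ship?"]
    first = await asyncio.gather(*(responder.ask(q) for q in queries))
    again = await responder.ask("What are your hours?")  # served from the cache
    worker.cancel()
    return first, again, responder.batches_sent

first, again, batches = asyncio.run(demo())
print(first, again, batches)
```

In the demo, three incoming queries produce a single batched model call containing two distinct prompts. The repeated question is then answered without touching the model.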

Monitoring and Alerting:

Implement robust monitoring and alerting systems to track the performance and health of your ChatGPT chatbot. Monitor key metrics like response times, error rates, resource utilization, and server health. Set up alerts to notify you when predefined thresholds are exceeded or when anomalies are detected. Monitoring and alerting systems help you identify and address performance issues proactively.
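Threshold-based alerting can be illustrated with a small sliding-window check. The sketch keeps the most recent requests and reports when the error rate or the 95th-percentile latency crosses a limit. The window size, the thresholds, and the practice of calling `check()` after recording requests are assumptions. In production you would more likely export these metrics to a monitoring system such as Prometheus or your cloud provider's equivalent and define alert rules there.

```python
import math
from collections import deque

class SlidingWindowMonitor:
    def __init__(self, window=100, max_error_rate=0.05, max_p95_seconds=2.0):
        self.samples = deque(maxlen=window)  # (latency_seconds, succeeded); old samples drop off
        self.max_error_rate = max_error_rate
        self.max_p95_seconds = max_p95_seconds

    def record(self, latency_seconds, succeeded):
        self.samples.append((latency_seconds, succeeded))

    def p95_latency(self):
        latencies = sorted(lat for lat, _ in self.samples)
        rank = math.ceil(0.95 * len(latencies))  # nearest-rank percentile
        return latencies[rank - 1]

    def check(self):
        """Return a list of alert messages (empty when everything is within limits)."""
        if not self.samples:
            return []
        alerts = []
        error_rate = sum(1 for _, ok in self.samples if not ok) / len(self.samples)
        if error_rate > self.max_error_rate:
            alerts.append(f"error rate {error_rate:.1%} exceeds {self.max_error_rate:.1%}")
        p95 = self.p95_latency()
        if p95 > self.max_p95_seconds:
            alerts.append(f"p95 latency {p95:.2f}s exceeds {self.max_p95_seconds:.2f}s")
        return alerts

monitor = SlidingWindowMonitor(window=20)
for _ in range(20):
    monitor.record(0.5, True)
healthy = monitor.check()       # no alerts
for _ in range(5):
    monitor.record(3.0, False)  # a burst of slow failures
alerts = monitor.check()
print(healthy, alerts)
```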

Load Testing:

Conduct thorough load testing to simulate high user traffic and identify potential bottlenecks or performance limitations. Load testing involves subjecting your chatbot to a significant number of concurrent interactions to assess its behavior under peak loads. By identifying bottlenecks and addressing them before scaling, you can ensure a smooth user experience as your chatbot handles increased traffic.
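A basic load test runs many simulated users concurrently and reports throughput and latency percentiles. The sketch below uses asyncio with a hypothetical `chatbot_reply` coroutine that only simulates work. To test a real deployment, you would replace it with a request to your own endpoint or use a dedicated load-testing tool such as Locust or k6. The concurrency and per-user request counts are arbitrary example values.

```python
import asyncio
import random
import statistics
import time

async def chatbot_reply(message):
    """Hypothetical stand-in for a request to your chatbot endpoint."""
    await asyncio.sleep(random.uniform(0.01, 0.05))
    return f"reply to {message}"

async def run_load_test(concurrency=50, requests_per_user=4):
    latencies = []
    errors = 0

    async def simulated_user(user_id):
        nonlocal errors
        for turn in range(requests_per_user):
            start = time.perf_counter()
            try:
                await chatbot_reply(f"user {user_id}, turn {turn}")
            except Exception:
                errors += 1
                continue
            latencies.append(time.perf_counter() - start)

    wall_start = time.perf_counter()
    await asyncio.gather(*(simulated_user(u) for u in range(concurrency)))
    wall = time.perf_counter() - wall_start
    cuts = statistics.quantiles(latencies, n=100)  # 99 cut points: cuts[49] = p50, cuts[94] = p95
    return {
        "requests": len(latencies) + errors,
        "errors": errors,
        "throughput_rps": len(latencies) / wall,
        "p50_s": cuts[49],
        "p95_s": cuts[94],
    }

report = asyncio.run(run_load_test())
print(report)
```

Raise `concurrency` step by step between runs. The point where p95 latency or the error count climbs sharply marks the bottleneck to investigate.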

Database Optimization:

If your chatbot relies on a database for storing user information or maintaining conversation history, optimize the database performance for scalability. Implement techniques such as indexing, query optimization, and database sharding to ensure efficient data retrieval and storage.
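To make the indexing advice concrete, the sketch below uses Python's built-in `sqlite3` module with a hypothetical `messages` table that stores conversation history. A composite index on `(conversation_id, created_at)` lets the database fetch one conversation's recent turns without scanning the whole table, and `EXPLAIN QUERY PLAN` confirms the index is used. The `shard_for` helper shows simple hash-based sharding across a hypothetical four shards. Many production systems use consistent hashing instead, so that adding a shard moves less data.

```python
import sqlite3
import zlib

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE messages (
        id INTEGER PRIMARY KEY,
        conversation_id TEXT NOT NULL,
        created_at INTEGER NOT NULL,
        role TEXT NOT NULL,
        content TEXT NOT NULL
    )
""")
# Composite index matching the most common query: recent turns of one conversation.
conn.execute("CREATE INDEX idx_messages_conv_time ON messages (conversation_id, created_at)")

conn.executemany(
    "INSERT INTO messages (conversation_id, created_at, role, content) VALUES (?, ?, ?, ?)",
    [(f"conv-{i % 50}", i, "user" if (i // 50) % 2 == 0 else "assistant", f"message {i}")
     for i in range(1000)],
)

RECENT_TURNS = """
    SELECT role, content FROM messages
    WHERE conversation_id = ?
    ORDER BY created_at DESC
    LIMIT ?
"""
history = conn.execute(RECENT_TURNS, ("conv-7", 3)).fetchall()
plan = conn.execute("EXPLAIN QUERY PLAN " + RECENT_TURNS, ("conv-7", 3)).fetchall()
print(history)
print(plan)  # the detail column should mention idx_messages_conv_time

def shard_for(user_id: str, num_shards: int = 4) -> int:
    """Map a user to a shard with a stable hash (Python's built-in hash() varies per process)."""
    return zlib.crc32(user_id.encode("utf-8")) % num_shards
```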

Fault Tolerance and Redundancy:

Ensure that your infrastructure is fault-tolerant and has redundancy mechanisms in place. Distributed systems, data replication, and backup strategies can help mitigate single points of failure and ensure high availability. Implement disaster recovery plans and backup procedures to safeguard your chatbot’s data and infrastructure.
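Redundant replicas only help if callers can fail over to them. The sketch below tries a list of replica endpoints in turn, retrying each one with exponential backoff and random jitter before moving to the next. The endpoint names and the `flaky_call` client are hypothetical placeholders for your own client code. The function treats only `ConnectionError` as transient, which is also an assumption to adapt.

```python
import random
import time

class AllReplicasFailed(Exception):
    pass

def call_with_failover(call_endpoint, endpoints, message, retries_per_endpoint=3,
                       base_delay=0.1, max_delay=2.0, sleep=time.sleep):
    """Try each replica in order; retry transient failures with exponential backoff and jitter."""
    last_error = None
    for endpoint in endpoints:
        for attempt in range(retries_per_endpoint):
            try:
                return call_endpoint(endpoint, message)
            except ConnectionError as exc:  # treat connection problems as transient
                last_error = exc
                if attempt < retries_per_endpoint - 1:
                    # Full jitter: sleep a random time up to the capped exponential delay.
                    delay = min(max_delay, base_delay * 2 ** attempt)
                    sleep(random.uniform(0, delay))
    raise AllReplicasFailed(f"all {len(endpoints)} endpoints failed") from last_error

def flaky_call(endpoint, message):
    """Hypothetical client: replica-a is down, replica-b answers."""
    if endpoint == "replica-a":
        raise ConnectionError("replica-a unreachable")
    return f"{endpoint} says: reply to {message!r}"

reply = call_with_failover(flaky_call, ["replica-a", "replica-b"], "hello",
                           sleep=lambda seconds: None)  # skip real sleeping in the demo
print(reply)  # replica-b says: reply to 'hello'
```

Passing `sleep` as a parameter keeps the demo fast. It also makes the backoff logic easy to test without real delays.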

User Feedback and Iteration:

Continuously gather user feedback and iterate on your ChatGPT chatbot to address performance concerns and usability issues. User feedback can provide valuable insights into areas that need improvement as you scale your chatbot. Regularly analyze user feedback, monitor user satisfaction, and make iterative enhancements to maintain a high-quality user experience.

Scaling your ChatGPT chatbot requires careful planning, infrastructure optimization, and performance tuning. By applying these practices and continuously refining your chatbot, you can ensure that it handles increased user demand while delivering a seamless and efficient conversational experience.

Conclusion

Building your own ChatGPT involves several key steps and considerations. Here is a summary of the process:

Data Collection: Gather a diverse and representative dataset of conversational data. This can be done through various sources, such as online forums, customer interactions, or publicly available datasets.

Data Preprocessing: Clean and preprocess the collected data to remove noise, correct errors, and ensure consistency. This may involve tasks like text normalization, tokenization, and removing personally identifiable information (PII).

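Model Training: Start from a pre-trained language model such as GPT-3 or GPT-4 and fine-tune it on your conversational dataset. Fine-tuning is itself a form of transfer learning (and, when your data comes from a specialized field, domain adaptation). The model keeps its general language ability while learning the nuances of your conversations. Because OpenAI does not release the weights of GPT-3 or GPT-4, fine-tuning these models happens through OpenAI's fine-tuning service for the models it supports. Open-weight models, by contrast, can be fine-tuned on your own hardware.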

Model Evaluation: Evaluate your trained ChatGPT model on held-out conversations that it did not see during training, using human ratings and task-specific metrics such as answer accuracy or task completion rate. This involves assessing the model's ability to generate coherent, contextually relevant, and accurate responses.

Deployment: Choose a deployment option that suits your requirements, such as cloud-based deployment on platforms like AWS, Google Cloud, or Microsoft Azure. Consider scalability, security, and integration aspects while deploying your ChatGPT model.

Testing and Iterating: Develop a comprehensive testing strategy to evaluate the performance and behavior of your ChatGPT chatbot. Collect user feedback, conduct A/B testing, and continuously iterate on your chatbot to enhance its responses and user experience.

Ethical Considerations: Be mindful of ethical considerations such as bias, fairness, privacy, and transparency when developing and deploying your ChatGPT chatbot. Regularly monitor and address any ethical concerns that may arise.
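The data preprocessing step summarized above can be sketched with regular expressions. This minimal example normalizes Unicode and whitespace and replaces email addresses and phone-number-like strings with placeholder tokens. The patterns are illustrative assumptions. They miss many forms of PII, such as names, street addresses, and account numbers, and the phone pattern also catches some non-phone numbers such as dates. Dedicated tools such as Microsoft Presidio are built for more thorough detection.

```python
import re
import unicodedata

EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# Loose pattern for phone-like numbers: optional "+", then 7+ digits with common separators.
PHONE = re.compile(r"(?<!\w)\+?\d(?:[\s().-]*\d){6,}(?!\w)")

def preprocess(text: str) -> str:
    text = unicodedata.normalize("NFKC", text)  # e.g. non-breaking space -> regular space
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    text = re.sub(r"\s+", " ", text).strip()    # collapse runs of whitespace
    return text

print(preprocess("Hi,\u00a0I'm at jane.doe@example.com  or +1 (555) 123-4567."))
# Hi, I'm at [EMAIL] or [PHONE].
```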

Building your own ChatGPT requires a combination of data preparation, model training, deployment, testing, and ethical considerations. It is an iterative process that involves continuous improvement and refinement based on user feedback and evolving user needs.

Remember that implementing ChatGPT effectively requires a solid understanding of natural language processing, machine learning, and software engineering principles. Keeping up with the latest research and advancements in the field will also help you enhance the capabilities of your ChatGPT chatbot.

By following these steps and considering the best practices discussed throughout this guide, you can build and deploy your own ChatGPT chatbot that provides engaging and contextually relevant conversations with users.

FAQs

Q1: How can I gather data for training my own ChatGPT model?

A1: You can collect conversational data from various sources such as online forums, customer interactions, or publicly available datasets. Ensure that the data is diverse and representative of the conversations you want your chatbot to handle.

Q2: Do I need to preprocess the data before training my ChatGPT model?

A2: Yes, data preprocessing is an essential step. Clean and preprocess the collected data to remove noise, correct errors, and ensure consistency. Tasks like text normalization, tokenization, and removing personally identifiable information (PII) are typically performed during this stage.

Q3: How do I train my ChatGPT model?

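A3: Start with a pre-trained language model like GPT-3 or GPT-4 and fine-tune it on your conversational dataset. Fine-tuning is a form of transfer learning: it adapts the model's general language ability to the specific nuances of your conversations. OpenAI does not publish the weights of these models, so you fine-tune them through OpenAI's fine-tuning service (for the models it supports) rather than on your own servers.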
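For chat models such as gpt-3.5-turbo, OpenAI's fine-tuning service expects training data as JSON Lines. Each line holds one conversation with a `messages` list of `role`/`content` entries. The sketch below converts question/answer pairs into that shape. The system prompt, the example pairs, and the output location are hypothetical. Check OpenAI's current fine-tuning guide for the supported models and fields before uploading.

```python
import json
import os
import tempfile

SYSTEM_PROMPT = "You are a concise support assistant for Example Corp."  # hypothetical

def to_chat_examples(pairs, system_prompt=SYSTEM_PROMPT):
    """Yield one chat-formatted training example per (user, assistant) pair."""
    for user_text, assistant_text in pairs:
        yield {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]
        }

def write_jsonl(examples, path):
    """Write one JSON object per line (the JSON Lines format)."""
    with open(path, "w", encoding="utf-8") as f:
        for example in examples:
            f.write(json.dumps(example, ensure_ascii=False) + "\n")

pairs = [
    ("How do I reset my password?", "Use the 'Forgot password' link on the sign-in page."),
    ("Do you ship internationally?", "Yes, to most countries. Rates are shown at checkout."),
]
path = os.path.join(tempfile.mkdtemp(), "train.jsonl")
write_jsonl(to_chat_examples(pairs), path)
print(open(path, encoding="utf-8").read())
```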

Q4: What are the ethical considerations when building my own ChatGPT?

A4: It’s important to be mindful of ethical considerations. Take steps to address bias, promote fairness, protect user privacy, and ensure transparency in your chatbot’s behavior. Regularly monitor and address any ethical concerns that may arise during the development and deployment of your ChatGPT chatbot.