How to Fine-Tune ChatGPT: A Comprehensive Guide

Fine-tuning an AI model like ChatGPT lets developers and businesses customize its responses to suit their specific needs. A model trained on your own examples can improve answer quality and reduce latency in your applications. This article walks through the process step by step: gathering and formatting the dataset, running the fine-tuning job, and assessing the performance of the trained model.

Gathering and Preparing the Dataset

Before you begin the fine-tuning process, you need to gather a dataset that represents your specific scenario. This dataset should contain hundreds or thousands of examples relevant to your use case. For large datasets, you can use Apache Spark or another distributed data-processing framework to help format the data. Save the dataset in a supported format such as CSV, TSV, XLSX, JSON, or JSONL.

Once you have your dataset, it’s important to sanitize the data by removing any duplicates or irrelevant information. OpenAI provides a tool to transform your dataset into the JSONL format, which is the format required for fine-tuning. This tool analyzes your dataset and suggests actions such as removing duplicates, adding separators, or adding whitespace characters to improve the training process.
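The cleanup step above can be sketched in a few lines of Python. This is a minimal illustration, not OpenAI's tool: it assumes each example is a dictionary with "prompt" and "completion" keys, and the function names and sample rows are hypothetical.

```python
import json

def dedupe_examples(examples):
    """Drop empty rows and exact duplicate (prompt, completion) pairs.

    Assumes each example is a dict with "prompt" and "completion" keys.
    The first occurrence of a duplicate is kept.
    """
    seen = set()
    unique = []
    for ex in examples:
        key = (ex["prompt"].strip(), ex["completion"].strip())
        if not key[0] or not key[1]:
            continue  # skip rows with an empty prompt or completion
        if key in seen:
            continue  # skip exact duplicates
        seen.add(key)
        unique.append(ex)
    return unique

def to_jsonl(examples):
    """Serialize examples as JSONL: one JSON object per line."""
    return "".join(json.dumps(ex, ensure_ascii=False) + "\n" for ex in examples)

# Made-up rows for illustration only.
examples = [
    {"prompt": "What is your refund policy?", "completion": "Refunds are available within 30 days."},
    {"prompt": "What is your refund policy?", "completion": "Refunds are available within 30 days."},
    {"prompt": "", "completion": "An answer without a question."},
]
cleaned = dedupe_examples(examples)
jsonl_text = to_jsonl(cleaned)
```

Writing jsonl_text to a file gives you one example per line, which is the shape JSONL requires.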

Additionally, it’s recommended to split the dataset into training and validation sets. The training set, which consists of around 95% of the examples, will be used for the fine-tuning process. The validation set, comprising around 5% of the examples, will be used to assess the performance of the trained model.
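If you prefer to do the split yourself, a rough sketch looks like this. The function name, the fixed seed, and the rounding rule are assumptions made for the example; the 5% default mirrors the ratio described above.

```python
import random

def split_dataset(examples, validation_fraction=0.05, seed=0):
    """Shuffle the examples and split them into (training, validation) lists."""
    shuffled = list(examples)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_valid = round(len(shuffled) * validation_fraction)
    return shuffled[n_valid:], shuffled[:n_valid]

# Integers stand in for real examples here.
train, valid = split_dataset(range(1000))
```

Shuffling before splitting matters when the source file is ordered (for example by date or topic), because otherwise the validation set would only cover one slice of the data.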

Prerequisites for Fine-Tuning

Before you can proceed with fine-tuning, you need to install the OpenAI Python library using pip. This library provides the necessary functions and tools for interacting with the OpenAI API. Additionally, you must set your OpenAI API key as an environment variable to authorize commands to OpenAI on behalf of your account.
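The library is installed with pip install openai, and it reads the key from the OPENAI_API_KEY environment variable. A small check like the one below can catch a missing key before you start a paid job. The helper name and the error message are illustrative, not part of the OpenAI library.

```python
import os

def load_api_key(env=None):
    """Return the API key from OPENAI_API_KEY, or raise if it is missing."""
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "OPENAI_API_KEY is not set; export it before running fine-tuning commands."
        )
    return key
```

Calling load_api_key() at the top of a script fails fast with a clear message instead of an authorization error halfway through an upload.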

Formatting the Dataset

To format your dataset into the required JSONL format, you can use the OpenAI tools provided. The command openai tools fine_tunes.prepare_data takes your dataset file as input and transforms it into the JSONL format. If the command fails due to missing dependencies, install the required dependency with pip and run the command again.

The formatting process will analyze your dataset and provide recommendations for fine-tuning. It’s advisable to apply all the recommended actions unless you have a specific reason not to do so. The command will also generate separate training and validation files based on the split action, which will be used in the subsequent steps of the fine-tuning process.
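To make the separator and whitespace recommendations concrete, here is a sketch of what they do to a single example. The separator and stop strings below are assumptions chosen for illustration; the tool may suggest different ones for your data, and the function name is hypothetical.

```python
SEPARATOR = "\n\n###\n\n"  # assumed marker appended to every prompt
STOP_SEQUENCE = " END"     # assumed marker appended to every completion

def format_example(prompt, completion, separator=SEPARATOR, stop_sequence=STOP_SEQUENCE):
    """Apply the separator and leading-whitespace conventions to one raw example."""
    return {
        # The fixed suffix tells the model where the prompt ends.
        "prompt": prompt.rstrip() + separator,
        # A leading space and a fixed ending mark the completion's boundaries.
        "completion": " " + completion.strip() + stop_sequence,
    }

formatted = format_example("Hi there ", "Hello!")
```

Whatever markers you end up with, prompts sent to the fine-tuned model later should end with the same separator used during training.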

Running the Fine-Tuning Process

Now that your dataset is properly formatted, you can proceed with the fine-tuning process. Fine-tuning is a paid functionality, and the price varies based on the size of your dataset and the base model you choose for training. You can run the suggested command provided by the OpenAI tools or customize it according to your requirements.

The fine-tuning command includes various options that allow you to specify the training and validation files, the base model to use, and other settings. For example, you can choose from base models like “ada,” “babbage,” “curie,” or “davinci,” each offering different performance and accuracy characteristics. You can also set options for classification problems or multiclass classification if your scenario requires it.
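As a rough sketch, the command can be assembled in Python before you run it. This assumes the legacy CLI's fine_tunes.create subcommand with -t for the training file and -v for the validation file, alongside the -m option; confirm the flags with the CLI's own help output. The file names are hypothetical, and nothing here contacts OpenAI.

```python
import shlex

BASE_MODELS = {"ada", "babbage", "curie", "davinci"}

def build_fine_tune_command(train_file, base_model, validation_file=None):
    """Build the argument list for a fine-tuning job without running it."""
    if base_model not in BASE_MODELS:
        raise ValueError(f"unknown base model: {base_model!r}")
    # Assumed flags: -t training file, -m base model, -v validation file.
    args = ["openai", "api", "fine_tunes.create", "-t", train_file, "-m", base_model]
    if validation_file:
        args += ["-v", validation_file]
    return args

# Hypothetical file names for illustration.
cmd = build_fine_tune_command("data_prepared_train.jsonl", "curie", "data_prepared_valid.jsonl")
command_line = shlex.join(cmd)
```

Keeping the arguments in a list and joining them with shlex.join avoids quoting mistakes when file names contain spaces.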

Once you execute the fine-tuning command, it will upload the dataset files to the OpenAI servers, and the fine-tuning process will begin. The duration of the process depends on the size of your dataset and the chosen base model. You can check the progress of the fine-tuning job by using the command openai api fine_tunes.get -i <FINE_TUNING_ID>.

Utilizing the Fine-Tuned Model

Once the fine-tuning process is complete, you will receive a fine-tuned model ID that you can use to interact with the trained model. To utilize the fine-tuned model, you can set the -m option with the ID when sending prompts to the OpenAI API. This allows you to receive responses from the fine-tuned model tailored to your specific scenario.

You can also use the fine-tuned model in the OpenAI playground, where you can experiment, test, and assess its performance. Comparing the responses of the fine-tuned model with the base ChatGPT model will help you evaluate the improvements achieved through fine-tuning.

Assessing the Performance

To assess the performance of your fine-tuned model, you can use the OpenAI tools to retrieve the results of the fine-tuning process. The command openai api fine_tunes.results -i <FINE_TUNING_ID> will provide you with a CSV file containing valuable information about the training and validation steps. This information includes training loss, training accuracy, validation loss, and other metrics that help evaluate the model’s performance.
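Once you have the CSV, a short script can pull out the latest value of each metric. The sketch below assumes column names such as training_loss and validation_loss and that validation metrics are only filled in on some steps; check the header of your own file. The sample rows are made up for illustration and are not real results.

```python
import csv
import io

def final_metrics(csv_text):
    """Return the last non-empty value recorded in each column."""
    latest = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        for name, value in row.items():
            if value not in (None, ""):
                latest[name] = float(value)
    return latest

# Illustrative data only; the column names are assumptions.
SAMPLE = """step,training_loss,training_token_accuracy,validation_loss
1,0.90,0.60,
2,0.70,0.72,0.75
3,0.55,0.80,
"""
metrics = final_metrics(SAMPLE)
```

A validation loss that rises while training loss keeps falling is a common sign of overfitting, so it is worth comparing the two columns over all steps rather than only the final values.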

Conclusion

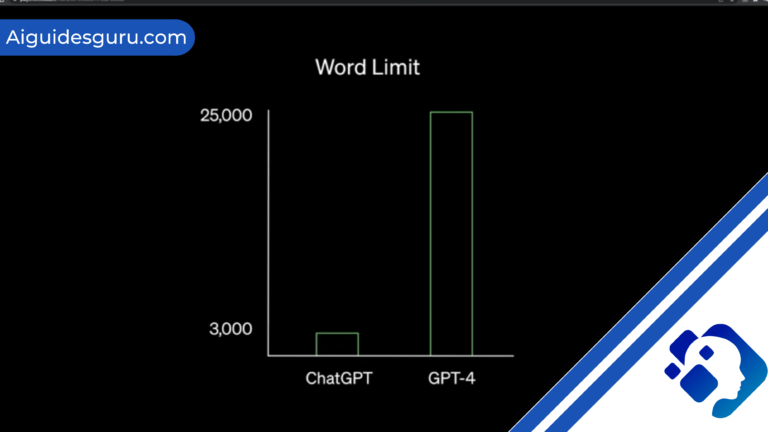

OpenAI is continuously developing new features and enhancements for fine-tuning models like ChatGPT. OpenAI is expected to release fine-tuning for GPT-4 in the near future, along with additional improvements such as support for function calling and the ability to fine-tune via the user interface. These advancements expand the possibilities for developers and businesses to further customize and optimize their AI models.

In conclusion, fine-tuning ChatGPT allows developers and businesses to create personalized models that better fit their specific use cases. By following the step-by-step process outlined in this article, you can gather and prepare your dataset, run the fine-tuning process, and utilize the fine-tuned model to improve the quality and performance of your AI applications. Fine-tuning opens up endless possibilities for customization and optimization, enabling more efficient and tailored interactions between humans and AI.

FAQs

Can I fine-tune ChatGPT for multilingual scenarios?

Yes, you can fine-tune ChatGPT to handle different languages by providing a dataset that includes examples in the desired language. This allows the model to generate responses specific to that language.

What are the benefits of fine-tuning ChatGPT?

Fine-tuning ChatGPT offers several advantages, including improved steerability, more reliable output formatting, and the ability to customize the tone of the responses. These benefits enhance the model’s performance and make it more adaptable to specific requirements.

Is fine-tuning ChatGPT a paid feature?

Yes, fine-tuning ChatGPT is a paid functionality. The cost depends on factors such as the size of your dataset and the base model used for training. OpenAI provides detailed pricing information on their website to help you estimate the cost of fine-tuning your models.

By following the guidelines and steps outlined in this article, you can fine-tune ChatGPT to create a customized AI model that meets your specific needs. With improved steerability, reliable output formatting, and the ability to customize the tone, you can enhance the performance and usability of your AI applications. Embrace the power of fine-tuning and unlock the full potential of ChatGPT for your business or project.